Coding Assistants

Alibaba Releases Qwen3-Coder-Next, Efficient Coding Model

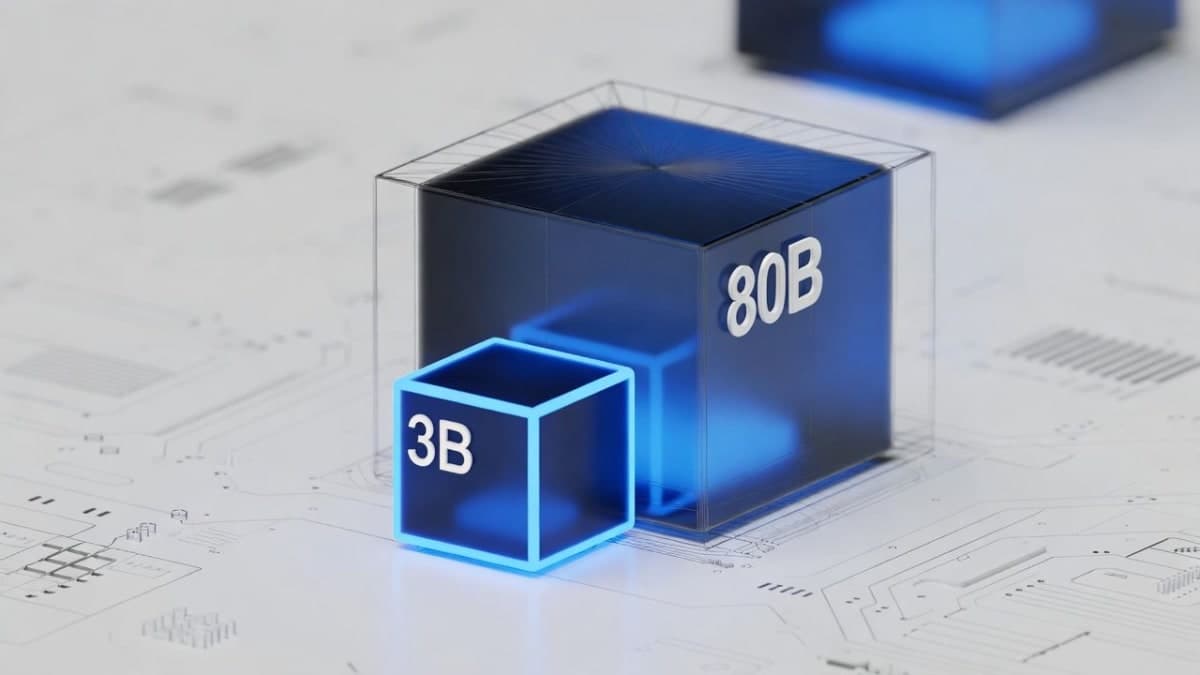

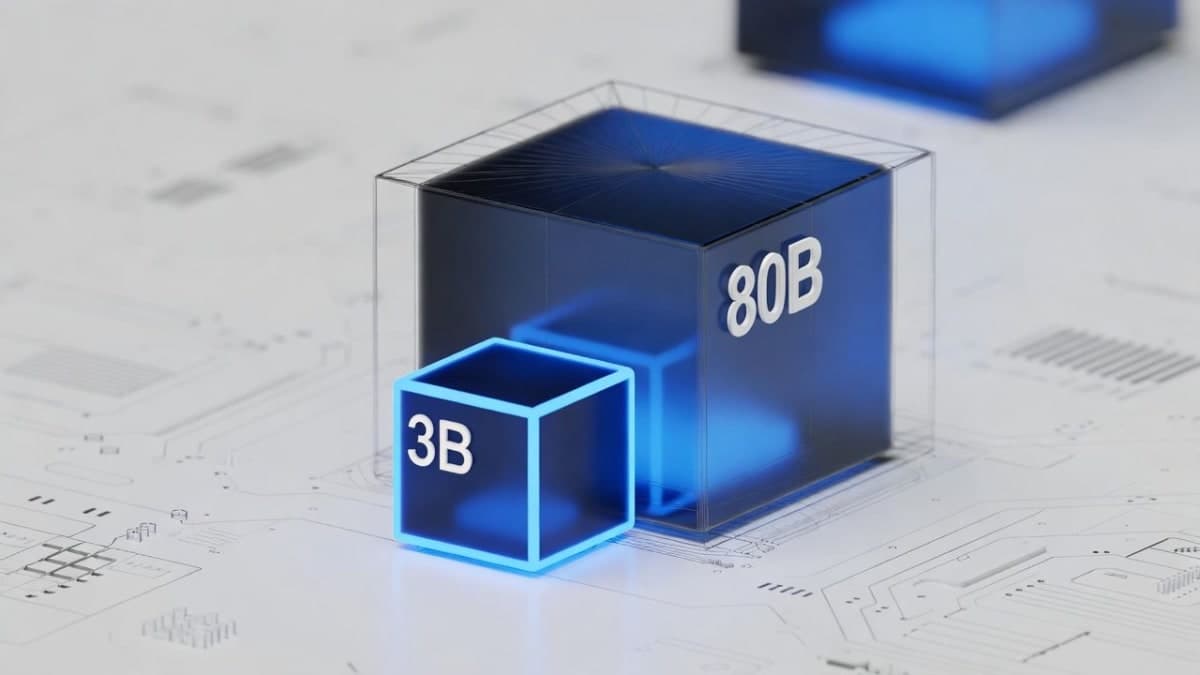

Alibaba's Qwen3-Coder-Next activates just 3B of 80B parameters, hitting 70.6% on SWE-Bench while running on consumer hardware.

Andrés Martínez | Feb 4, 2026 | 2 min

4 articles tagged with "MoE"

Coding Assistants

Alibaba's Qwen3-Coder-Next activates just 3B of 80B parameters, hitting 70.6% on SWE-Bench while running on consumer hardware.

LLMs & Foundation Models

A Shanghai startup's new open-source model claims benchmark wins over DeepSeek V3.2 with a dramatically smaller active parameter count.

Image Generation

The Instruct version brings Chain-of-Thought processing to the 80B-parameter open-source model.

LLMs & Foundation Models

The model hits #8 globally on LMArena and #2 in math reasoning, though Western labs have already moved on to newer models.
