Tencent released HunyuanImage 3.0-Instruct today, adding image-to-image generation and reasoning capabilities to its already large open-source text-to-image model. The update follows the base model's September 2025 release.

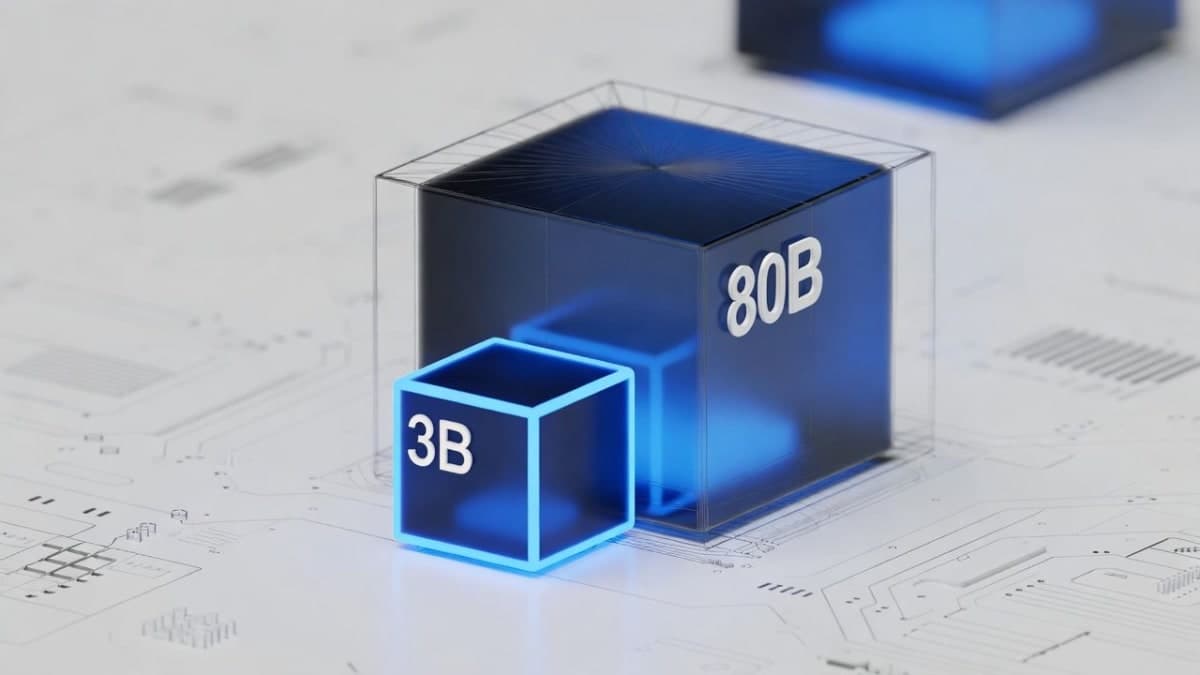

The Instruct variant introduces native Chain-of-Thought processing. Before generating an image, the model interprets the prompt, works through an internal reasoning phase, and only then generates. Tencent trained this capability with a multi-stage post-training pipeline that includes DPO (Direct Preference Optimization) to reduce distortions and MixGRPO for online reinforcement learning targeting style, lighting, and text-image alignment. The architecture is unchanged: an 80B-parameter Mixture-of-Experts (MoE) model with 64 experts, of which about 13B parameters are active per token.
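The "13B active out of 80B" figure comes from MoE routing: a small gate scores every expert for each token, and only the top-scoring few actually run, so roughly 16% of the weights do work on any given token. The sketch below illustrates generic top-k softmax gating on scalars. It is not Tencent's implementation; the gating scheme and the value `TOP_K = 8` are illustrative assumptions, and real layers route vectors through learned gates while shared components (such as attention) run for every token.

```python
import math
import random

NUM_EXPERTS = 64  # from the model card: 64 experts
TOP_K = 8  # assumption: illustrative value, not confirmed for HunyuanImage 3.0


def route(gate_logits, k=TOP_K):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    # Softmax over all experts, shifted by the max for numerical stability
    m = max(gate_logits)
    exps = [math.exp(x - m) for x in gate_logits]
    total = sum(exps)
    probs = [e / total for e in exps]
    # Keep only the k most probable experts
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    # Renormalize so the selected weights sum to 1
    norm = sum(probs[i] for i in top)
    return [(i, probs[i] / norm) for i in top]


def moe_forward(token, experts, gate_logits, k=TOP_K):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](token) for i, w in route(gate_logits, k))


if __name__ == "__main__":
    # Toy experts: expert i just scales its input by i
    experts = [lambda x, s=i: x * s for i in range(NUM_EXPERTS)]
    random.seed(0)
    logits = [random.gauss(0.0, 1.0) for _ in range(NUM_EXPERTS)]
    print("selected experts:", [i for i, _ in route(logits)])
    print("output:", moe_forward(1.0, experts, logits))
```

Only `TOP_K` of the 64 expert functions are called per token, which is why per-token compute tracks the active parameter count rather than the total.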

New features include image editing with element preservation, style transfer, and multi-image fusion. The model card includes examples of adding or removing objects while leaving the surrounding content intact. A distilled checkpoint is also available for faster inference in 8 sampling steps. Compute requirements remain steep: Tencent recommends 3-4 GPUs with 80GB of VRAM each.
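The steep hardware recommendation follows from simple arithmetic: MoE reduces compute per token, but all 80B parameters must still be resident in GPU memory. A back-of-the-envelope sketch, assuming 16-bit (bf16) weights, which the release notes quoted here do not specify, and ignoring activations, caches, and framework overhead:

```python
import math


def weight_memory_gb(total_params, bytes_per_param):
    """Memory needed just to hold the weights, in decimal gigabytes."""
    return total_params * bytes_per_param / 1e9


def min_gpus_for_weights(total_params, bytes_per_param, gpu_mem_gb=80):
    """Lower bound on GPU count: weights only, no activations or caches."""
    return math.ceil(weight_memory_gb(total_params, bytes_per_param) / gpu_mem_gb)


TOTAL_PARAMS = 80e9  # every expert must be loaded, not just the ~13B active ones

for name, nbytes in [("bf16", 2), ("8-bit", 1)]:
    print(
        f"{name}: {weight_memory_gb(TOTAL_PARAMS, nbytes):.0f} GB of weights, "
        f"at least {min_gpus_for_weights(TOTAL_PARAMS, nbytes)} x 80GB GPUs"
    )
```

At bf16 the weights alone fill two 80GB cards completely, so the extra headroom needed for activations during generation plausibly explains the 3-4 GPU recommendation.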

The Tencent Hunyuan Community License applies. Commercial use is permitted, but products with more than 100 million monthly active users require a separate license. The license's territory excludes the EU, the UK, and South Korea.

The Bottom Line: The Instruct release adds editing capabilities to what Tencent calls the largest open-source image generation model, though the hardware requirements put it out of reach for most local users.

QUICK FACTS

- 80B total parameters, 13B active per token (MoE with 64 experts)

- Released January 26, 2026; base model launched September 28, 2025

- Requires 3-4× 80GB GPUs for inference

- Distilled checkpoint available for 8-step generation

- License excludes EU, UK, South Korea; 100M+ MAU products need separate approval