Alibaba's Qwen team released Qwen3-Coder-Next, an open-weight coding model built for running locally. The model weights landed on Hugging Face under Apache 2.0, joining the broader Qwen3-Coder family that includes the larger 480B-parameter flagship.

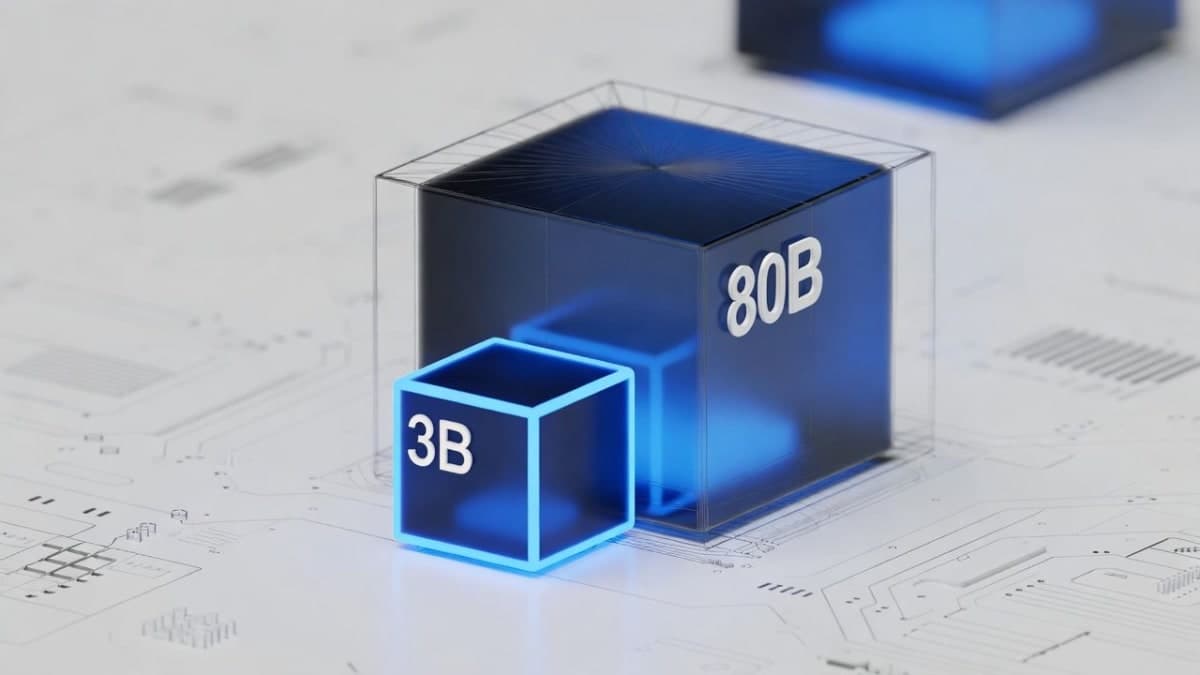

The architecture is aggressively sparse. Qwen3-Coder-Next has 80 billion total parameters but activates only 3 billion per token through a Mixture-of-Experts setup. The team claims performance comparable to models with 10-20x more active parameters, and its self-reported benchmark results partly support that: 70.6% on SWE-Bench Verified using SWE-Agent, trailing GLM-4.7's 74.2% but roughly matching DeepSeek-V3.2 at 70.2%. Both competitors are far larger, with hundreds of billions of total parameters and tens of billions active per token.
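To make the sparsity concrete, here is a minimal sketch of top-k expert routing, the general mechanism behind Mixture-of-Experts layers. It is a toy illustration, not Qwen's actual router: the function names, the expert count, and the value of `k` are all hypothetical. Only the 80B and 3B figures come from the reported specs.

```python
import math

def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and softmax-normalize their weights."""
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]

def moe_forward(x, experts, router_logits, k):
    """Run only the selected experts; every other expert is skipped entirely."""
    return sum(w * experts[i](x) for i, w in top_k_route(router_logits, k))

# Hypothetical toy layer: four "experts", two active per token.
toy_experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
toy_output = moe_forward(1.0, toy_experts, [0.1, 2.0, -1.0, 1.5], k=2)

# Share of parameters doing work per token, from the reported figures.
TOTAL_PARAMS = 80e9
ACTIVE_PARAMS = 3e9
active_fraction = ACTIVE_PARAMS / TOTAL_PARAMS  # about 3.75%
```

The point of the design is that compute per token scales with the active parameters, while memory still has to hold all of them, which is why the hardware bill is driven by the 80B figure rather than the 3B one.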

Hardware requirements matter here. According to Unsloth's documentation, a 4-bit quantized version needs roughly 46GB of RAM or unified memory. That's a high-end Mac Studio or a multi-GPU rig, not a laptop, but for sustained coding sessions it can work out cheaper than paying per-token API fees. The model supports 256K context natively.
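A back-of-the-envelope check shows where a figure like 46GB can come from. The sketch below assumes every weight is stored at exactly 4 bits, which real quantization schemes do not do (they typically keep some tensors at higher precision), so treat the result as a floor rather than a spec. The helper name is made up for this example.

```python
def weight_memory_gb(total_params, bits_per_weight):
    """Memory for the raw weights alone, in decimal gigabytes."""
    return total_params * bits_per_weight / 8 / 1e9

# 80B parameters at an assumed flat 4 bits each.
weights_gb = weight_memory_gb(80e9, 4)

# Gap between that floor and the ~46GB figure quoted above.
REPORTED_GB = 46
headroom_gb = REPORTED_GB - weights_gb
```

The weights alone come to about 40GB. The remaining 6GB or so is plausibly higher-precision layers, the KV cache, and runtime buffers, though that breakdown is an assumption and not something the quoted documentation spells out here.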

The training approach differs from typical code completion models. Qwen describes an "agentic training" pipeline using 800,000 verifiable coding tasks mined from GitHub pull requests, paired with containerized execution environments for reinforcement learning. The model learns to plan, call tools, and recover from failures across long horizons rather than just predicting the next token.
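What makes a task "verifiable" is that a program, not a human, decides whether the attempt succeeded. The sketch below illustrates that idea with a pass/fail reward based on a test script's exit code. It is an assumption-heavy stand-in, not Qwen's pipeline: the function name is hypothetical, and a temporary directory replaces the containerized environments described above, so it offers no real isolation.

```python
import subprocess
import sys
import tempfile
from pathlib import Path

def verifiable_reward(candidate_source, test_source, timeout_s=10.0):
    """Return 1.0 if the test script exits cleanly against the candidate, else 0.0."""
    with tempfile.TemporaryDirectory() as workdir:
        # The candidate is written where the test script can import it.
        Path(workdir, "solution.py").write_text(candidate_source)
        Path(workdir, "run_tests.py").write_text(test_source)
        try:
            result = subprocess.run(
                [sys.executable, "run_tests.py"],
                cwd=workdir,
                capture_output=True,
                timeout=timeout_s,
            )
        except subprocess.TimeoutExpired:
            return 0.0  # a hung attempt counts as a failure
    return 1.0 if result.returncode == 0 else 0.0

# Hypothetical task: the tests define success, the candidate is the model's attempt.
tests = "from solution import add\nassert add(2, 3) == 5\n"
good_attempt = "def add(a, b):\n    return a + b\n"
bad_attempt = "def add(a, b):\n    return a - b\n"
```

Calling `verifiable_reward(good_attempt, tests)` rewards the working attempt and `verifiable_reward(bad_attempt, tests)` does not. A reinforcement learning loop can use a signal like this across multi-step attempts without any hand labeling.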

Integration options include SGLang and vLLM for server deployment, plus GGUF quantizations via llama.cpp for local use. The GitHub repo provides setup instructions for pairing it with Claude Code and Cline through OpenAI-compatible endpoints.
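Because these servers speak the OpenAI-compatible chat completions format, any HTTP client can talk to a local deployment. The sketch below uses only the Python standard library. The base URL, port, and model name are placeholders that depend on how the server was launched, so check your own setup and do not treat them as defaults from the repo.

```python
import json
import urllib.request

BASE_URL = "http://localhost:8000/v1"  # assumed local server address
MODEL_NAME = "qwen3-coder-next"        # placeholder; use the name your server registers

def build_chat_request(prompt, base_url=BASE_URL, model=MODEL_NAME):
    """Build a POST request in the OpenAI chat completions format."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        f"{base_url}/chat/completions",
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )

def ask(prompt):
    """Send the prompt to the server and return the first reply's text."""
    with urllib.request.urlopen(build_chat_request(prompt)) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a server running, `ask("Write a function that reverses a linked list.")` returns the model's reply as a string. Coding agents that accept a custom OpenAI-style base URL plug in through the same endpoint.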

The Bottom Line: Qwen3-Coder-Next trades raw benchmark leadership for dramatically lower inference costs, making it the current efficiency champion for developers who want coding agents running on their own hardware.

QUICK FACTS

- 80B total parameters, 3B activated per token

- 70.6% on SWE-Bench Verified (self-reported via SWE-Agent scaffold)

- 256K native context, extendable to 1M

- ~46GB RAM needed for 4-bit quantized version

- Apache 2.0 license