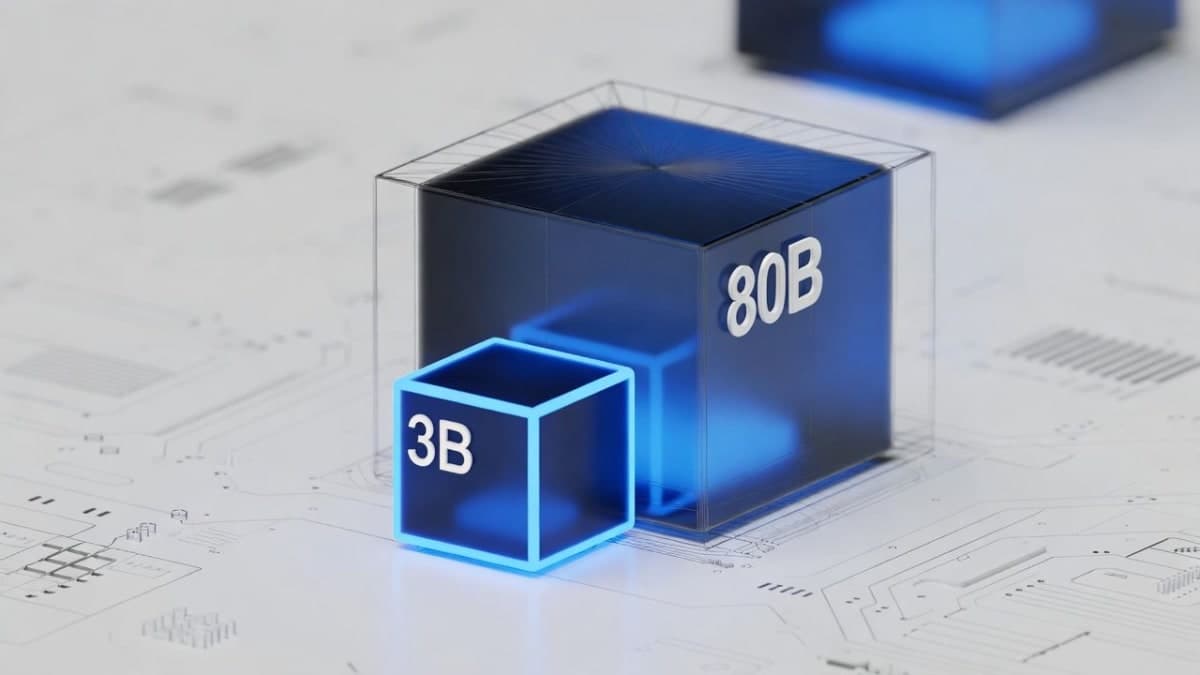

Baidu released PaddleOCR-VL-1.5 on January 29, 2026, and it immediately claimed the top spot on OmniDocBench v1.5 with 94.5% accuracy. The model has 0.9 billion parameters. Qwen3-VL-235B-A22B has 235 billion, 22 billion of them active per token. Gemini 3 Pro won't even disclose its parameter count.

The numbers that matter

The benchmark covers nine document types including academic papers, textbooks, handwritten notes, and newspapers. It evaluates text recognition, table parsing, formula extraction, and reading order detection. PaddleOCR-VL-1.5 leads in all four categories, according to Baidu's technical report.
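Text-recognition quality on document benchmarks is commonly scored with a normalized edit distance between the model's output and the ground truth, where lower is better. The sketch below is a minimal version of that metric, assuming normalization by the length of the longer string. It is an illustration only, not OmniDocBench's scoring code; a real benchmark harness also has to normalize text and match predicted blocks to ground-truth blocks.

```python
def levenshtein(a: str, b: str) -> int:
    """Minimum number of single-character insertions, deletions, or substitutions."""
    # Keep only the previous row of the DP table, so memory is O(len(b)).
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, start=1):
        curr = [i]
        for j, cb in enumerate(b, start=1):
            cost = 0 if ca == cb else 1
            curr.append(min(prev[j] + 1,          # delete ca
                            curr[j - 1] + 1,      # insert cb
                            prev[j - 1] + cost))  # substitute, or match for free
        prev = curr
    return prev[-1]


def normalized_edit_distance(pred: str, truth: str) -> float:
    """Edit distance scaled to [0, 1]; 0.0 means an exact match."""
    longest = max(len(pred), len(truth))
    if longest == 0:
        return 0.0
    return levenshtein(pred, truth) / longest


if __name__ == "__main__":
    truth = "Total due: $1,250.00"
    pred = "Tota1 due: $1,25O.00"  # two classic OCR confusions: l->1 and 0->O
    print(normalized_edit_distance(pred, truth))  # 2 edits / 20 chars -> 0.1
```

Two wrong characters in a 20-character line already cost 0.1, which is why small gains on this metric can matter for invoices and tables full of digits.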

What caught my attention was the comparison against Gemini 3 Pro and Qwen3-VL-235B-A22B-Instruct. Neither model is on the official leaderboard, so Baidu ran those evaluations itself, which is worth noting. But even with that caveat, a sub-1B model matching or exceeding a 235B model and a frontier model of undisclosed size isn't supposed to happen. The parameter efficiency here is genuinely unusual.

The previous version, PaddleOCR-VL, already topped the benchmark in October 2025. This update pushes accuracy from roughly 90.7% to 94.5%. Most of that gain comes from improved table and formula recognition, areas where the original struggled against specialized tools.

Crumpled papers and bad lighting

Here's the thing about most OCR benchmarks: they test pristine scans. Real documents get photographed at angles, under fluorescent lights, while folded in someone's pocket.

Baidu created a companion benchmark called Real5-OmniDocBench specifically to test these conditions. It covers scanning artifacts, skew, warping, screen photography, and variable illumination. PaddleOCR-VL-1.5 leads across all five scenarios.

The model introduces what Baidu calls "irregular-shaped localization," essentially polygonal bounding boxes instead of rectangles. When you photograph a contract that's slightly bent, the text regions aren't rectangular anymore. Traditional OCR tools struggle with this. The new approach handles trapezoidal and curved text regions natively.
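To see why a polygon helps, compare the area of a skewed text region with the area of the axis-aligned rectangle needed to enclose it. The sketch below applies the shoelace formula to a made-up quadrilateral; the coordinates are hypothetical, chosen to mimic a line of text photographed at a slight angle. It illustrates the geometry only and is not how PP-DocLayoutV3 or PaddleOCR-VL-1.5 compute their boxes.

```python
Point = tuple[float, float]


def polygon_area(points: list[Point]) -> float:
    """Shoelace formula: area of a simple polygon with vertices listed in order."""
    total = 0.0
    n = len(points)
    for i in range(n):
        x1, y1 = points[i]
        x2, y2 = points[(i + 1) % n]  # wrap around to close the polygon
        total += x1 * y2 - x2 * y1
    return abs(total) / 2.0


def bounding_rect_area(points: list[Point]) -> float:
    """Area of the smallest axis-aligned rectangle containing every vertex."""
    xs = [x for x, _ in points]
    ys = [y for _, y in points]
    return (max(xs) - min(xs)) * (max(ys) - min(ys))


# Hypothetical skewed text line: a parallelogram 400 px wide and 30 px tall,
# sheared so that its right end sits 60 px lower than its left end.
line = [(0, 0), (400, 60), (400, 90), (0, 30)]

poly = polygon_area(line)
rect = bounding_rect_area(line)
print(f"polygon: {poly:.0f} px^2, rectangle: {rect:.0f} px^2")
print(f"fraction of rectangle that is background: {1 - poly / rect:.2f}")
```

Here the polygon covers 12,000 px² while the enclosing rectangle covers 36,000 px², so two-thirds of the rectangle is background. On a dense page that background usually includes pieces of neighboring lines, which is the kind of overlap a polygonal box is meant to avoid.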

Whether this translates to real-world performance depends heavily on your specific documents. Benchmark wins are nice, but I'd want to see independent testing on actual enterprise document pipelines before getting too excited.

What it actually does

The architecture combines a layout detection model (PP-DocLayoutV3) with a vision-language recognition model built on ERNIE-4.5-0.3B. The layout model identifies text blocks, tables, formulas, and charts, then establishes reading order. The VLM handles recognition.
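The two-stage design can be sketched as a simple data flow: the layout stage produces typed regions with a reading-order index, and the recognizer runs once per region in that order. Everything in the sketch is hypothetical: `Region`, `detect_layout`, `recognize`, and the per-type prompt strings are placeholders standing in for PP-DocLayoutV3 and the ERNIE-4.5-based recognizer, not PaddleOCR's actual API.

```python
from dataclasses import dataclass


@dataclass
class Region:
    kind: str                        # e.g. "text", "table", "formula", "chart"
    order: int                       # reading-order index from the layout stage
    bbox: tuple[int, int, int, int]  # x0, y0, x1, y1 in page pixels


# Hypothetical task prompts, one per region type; the real model's may differ.
PROMPTS = {
    "text": "OCR:",
    "table": "Table Recognition:",
    "formula": "Formula Recognition:",
    "chart": "Chart Recognition:",
}


def detect_layout(page) -> list[Region]:
    """Placeholder for the layout model: returns regions in arbitrary order."""
    return [
        Region("table", 2, (50, 400, 550, 700)),
        Region("text", 0, (50, 40, 550, 120)),
        Region("formula", 1, (150, 140, 450, 200)),
    ]


def recognize(page, region: Region, prompt: str) -> str:
    """Placeholder for the VLM; a real system would crop region.bbox and run the model."""
    return f"<{region.kind} recognized with {prompt!r}>"


def parse_page(page) -> list[str]:
    regions = detect_layout(page)
    # Recognize in reading order so the assembled output reads correctly.
    regions.sort(key=lambda r: r.order)
    return [recognize(page, r, PROMPTS[r.kind]) for r in regions]


for block in parse_page(page=None):
    print(block)
```

The design choice this illustrates is the split of responsibilities: a small recognizer only ever sees one cropped region plus a task hint, while page-level structure lives entirely in the layout stage.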

New capabilities in version 1.5 include seal recognition (common in Chinese business documents), text spotting for scene text, and automatic cross-page table merging. Language support expanded to 111 languages, adding Tibetan and Bengali.
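How PaddleOCR-VL-1.5 decides when to merge tables across pages isn't described here, so the sketch below is a hypothetical heuristic, not the model's method. It joins the last table on one page with the first table on the next when their column counts match, and drops the continuation's first row if it simply repeats the header.

```python
from typing import Optional

Table = list[list[str]]  # rows of cell strings; row 0 is the header


def merge_across_pages(end_of_page: Table, start_of_next: Table) -> Optional[Table]:
    """Hypothetical rule for joining a table split by a page break.

    Returns the merged table, or None if the fragments don't look like one table.
    """
    if not end_of_page or not start_of_next:
        return None
    # Fragments of the same table should have the same number of columns.
    if len(end_of_page[0]) != len(start_of_next[0]):
        return None
    continuation = start_of_next
    # Many documents repeat the header row on each page; keep only one copy.
    if start_of_next[0] == end_of_page[0]:
        continuation = start_of_next[1:]
    return end_of_page + continuation


page1 = [["Item", "Qty", "Price"], ["Bolts", "100", "4.00"]]
page2 = [["Item", "Qty", "Price"], ["Nuts", "250", "5.50"]]
for row in merge_across_pages(page1, page2):
    print(row)
```

Column count alone will happily merge two unrelated tables of the same width, so a production rule would also need positional cues, such as whether the second fragment starts at the very top of its page.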

Model weights are available on Hugging Face under Apache 2.0 licensing. The PaddleOCR GitHub repository has 62,000 stars and is under active development.

The inference cost question

Processing 512 PDF documents on a single A100 GPU, PaddleOCR-VL-1.5 completed in 2.16 hours including PDF rendering and Markdown generation. MinerU took 4.18 hours for the same task. Gemini 3 Pro, which is only available as a hosted service, took 2.79 hours but achieved lower accuracy.
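Converting those wall-clock times into per-document figures makes the gap easier to read. The only inputs are the three reported times and the 512-document count.

```python
DOCS = 512
# Wall-clock hours for the full 512-PDF run, as reported.
HOURS = {"PaddleOCR-VL-1.5": 2.16, "Gemini 3 Pro": 2.79, "MinerU": 4.18}


def seconds_per_doc(hours: float, docs: int = DOCS) -> float:
    """Average processing time per document, in seconds."""
    return hours * 3600 / docs


baseline = HOURS["PaddleOCR-VL-1.5"]
for name, h in HOURS.items():
    print(f"{name:17} {seconds_per_doc(h):5.1f} s/doc  "
          f"{DOCS / h:6.1f} docs/h  {h / baseline:.2f}x the time")
```

That works out to about 15.2 seconds per document for PaddleOCR-VL-1.5, 19.6 for Gemini 3 Pro, and 29.4 for MinerU, so MinerU needs roughly 1.94 times as long for the same batch.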

The model supports vLLM and SGLang backends for optimized serving. AMD has already published deployment guides for ROCm, claiming day-zero support.
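Both vLLM and SGLang can expose an OpenAI-compatible chat-completions endpoint, so a client only needs to send an image plus a text prompt. The sketch below uses only the Python standard library and assumes a server at `http://localhost:8000`, a served-model name of `PaddlePaddle/PaddleOCR-VL-1.5`, and a task prompt of `"OCR:"`; all three are hypothetical, so check the model card and your server's launch flags for the real values.

```python
import base64
import json
import urllib.request

# Assumptions: an OpenAI-compatible server at this URL serving this model name.
ENDPOINT = "http://localhost:8000/v1/chat/completions"
MODEL = "PaddlePaddle/PaddleOCR-VL-1.5"  # hypothetical served-model name


def build_request(image_bytes: bytes, prompt: str = "OCR:") -> dict:
    """Build an OpenAI-style chat payload carrying one PNG image and a task prompt."""
    data_url = "data:image/png;base64," + base64.b64encode(image_bytes).decode("ascii")
    return {
        "model": MODEL,
        "messages": [{
            "role": "user",
            "content": [
                {"type": "image_url", "image_url": {"url": data_url}},
                {"type": "text", "text": prompt},
            ],
        }],
        "temperature": 0.0,  # deterministic decoding suits transcription
    }


def send(payload: dict) -> str:
    """POST the payload to the server and return the model's text reply."""
    req = urllib.request.Request(
        ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
    )
    with urllib.request.urlopen(req) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]
```

With a server running, `send(build_request(open("page.png", "rb").read()))` would return the recognized text for a hypothetical `page.png`. Keeping payload construction separate from the network call makes the request easy to inspect and test offline.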

A small parameter count translates directly into lower inference costs. For high-volume document processing, the difference between a 0.9B model and a 235B model isn't academic. It's the difference between a viable production deployment and a proof of concept.
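A back-of-the-envelope comparison shows where the savings come from. It assumes 16-bit weights (2 bytes per parameter) and the common approximation of about 2 FLOPs per active parameter per token, and it ignores activations and KV cache. For Qwen3-VL it uses the 22B active parameters implied by the A22B in its name for compute, but all 235B for memory, since every expert must stay loaded.

```python
BYTES_PER_PARAM = 2  # bf16/fp16 weights (assumption)

MODELS = {
    # name: (total parameters, parameters active per token)
    "PaddleOCR-VL-1.5": (0.9e9, 0.9e9),
    "Qwen3-VL-235B-A22B": (235e9, 22e9),  # mixture-of-experts
}


def weight_memory_gb(total_params: float) -> float:
    """Memory needed just to hold the weights, in gigabytes (1e9 bytes)."""
    return total_params * BYTES_PER_PARAM / 1e9


def gflops_per_token(active_params: float) -> float:
    """Rough forward-pass cost: about 2 FLOPs per active parameter per token."""
    return 2 * active_params / 1e9


for name, (total, active) in MODELS.items():
    print(f"{name:20} weights ~{weight_memory_gb(total):6.1f} GB, "
          f"~{gflops_per_token(active):5.1f} GFLOPs/token")
```

Under these assumptions the small model needs about 1.8 GB for weights and the large one about 470 GB, which means several 80 GB accelerators before serving a single request. Per-token compute differs by roughly 24x (1.8 vs. 44 GFLOPs), a smaller gap than the raw parameter counts suggest but still a large one.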

Context

Document parsing has become surprisingly competitive over the past year. DeepSeek released DeepSeek-OCR in October 2025, emphasizing token compression for long documents. Google's Document AI and Amazon Textract continue dominating enterprise deployments. The open-source ecosystem now includes PaddleOCR, MinerU, and various VLM-based approaches.

PaddleOCR-VL-1.5 positions itself as a practical middle ground: better accuracy than general-purpose VLMs, lower resource requirements than massive multimodal models, and open weights for those who need to run locally.

The FTC deadline for updated AI model disclosure requirements is March 15, 2026. The timing is probably coincidental, but with this release only two days old, Baidu's benchmark claims may well face scrutiny as part of the broader regulatory review.