On January 27, the Allen Institute for AI (Ai2) released SERA, a family of open-source coding agents that solve real GitHub issues at rates competitive with models costing orders of magnitude more to train. The flagship SERA-32B hits 54.2% on SWE-Bench Verified at 64K context, and its training recipe reproduces earlier open-source results for about $400 on commodity cloud GPUs.

What SERA actually does

Coding agents differ from code completion tools in a fundamental way: they navigate entire repositories, understand relationships between files, and produce patches that pass existing tests. SWE-Bench Verified, the standard benchmark for this capability, consists of 500 real GitHub issues that have been manually verified as solvable. A model needs to read the issue, explore the codebase, modify the right files, and avoid breaking anything else.

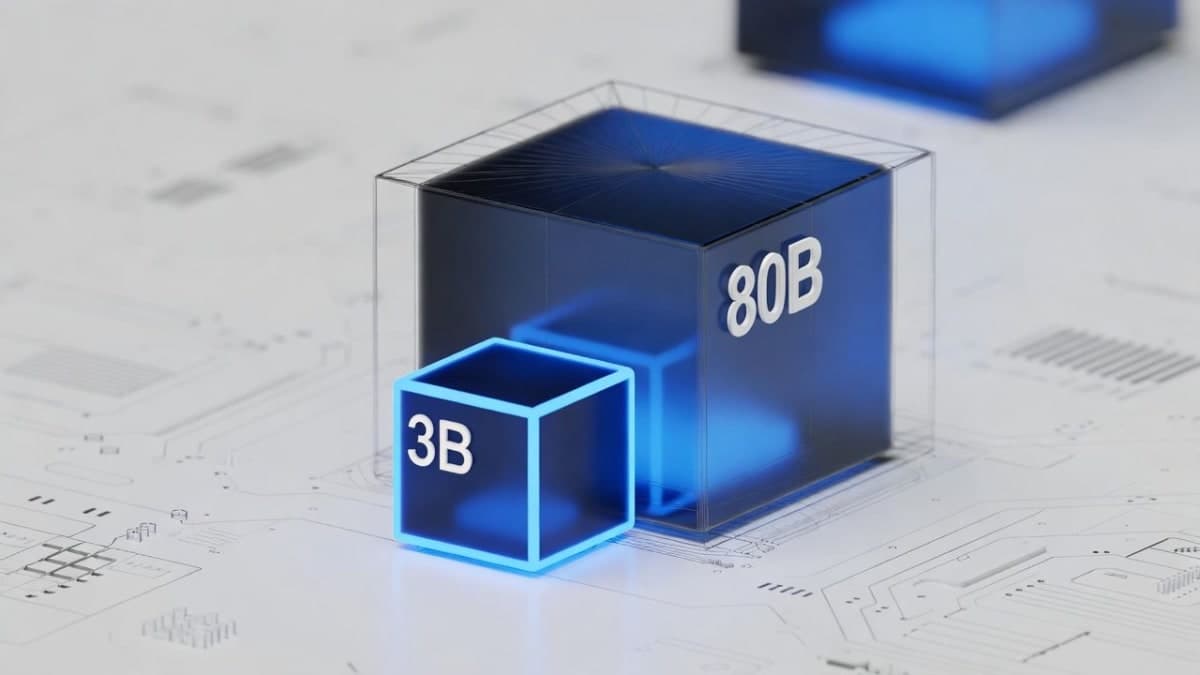

SERA-32B solves 49.5% of these at 32K context and 54.2% at 64K context. The smaller SERA-8B manages 29.4%, which doesn't sound impressive until you compare it against SkyRL-Agent-8B's 9.4% using the same Qwen 3 8B base.

The numbers matter less than the comparison set. At 32K context, SERA-32B sits within a percentage point of Devstral Small 2 (50.0%) and GLM-4.5-Air (50.5%). Devstral Small 2 is Mistral's entry in this space, and GLM-4.5-Air has over 100 billion total parameters. SERA uses 32 billion.

The training economics are the real story

Most coding agent training involves either reinforcement learning with execution environments or carefully verified synthetic data. Both require substantial infrastructure. The RL path needs sandboxed environments, distributed training clusters, and rollout orchestration. Verified synthetic data means generating code, running tests, and filtering for correctness.

SERA skips most of this with what Ai2 calls "Soft Verified Generation." Here's the approach: a teacher model makes changes to a codebase starting from a randomly selected function. Those changes get converted into a synthetic pull request description. Then the teacher attempts to reproduce the change given only that description. The patches are compared using line-level recall rather than test execution.
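The description stops at "line-level recall," so the following is a minimal sketch under stated assumptions: recall is taken to be the fraction of the reference patch's added and removed lines that also appear in the reproduced patch, compared after stripping whitespace. The function names `changed_lines` and `line_recall` are hypothetical and not taken from Ai2's code.

```python
from collections import Counter


def changed_lines(diff_text: str) -> Counter:
    """Collect the added and removed lines of a unified diff as a multiset.

    File headers ("--- ", "+++ ") and hunk headers ("@@") are skipped. Each
    changed line is keyed by its sign plus its whitespace-stripped text, so
    pure re-indentation still counts as a match (an assumption). A removed
    line whose own text starts with "-- " would be misread as a header; this
    sketch ignores that edge case.
    """
    lines: Counter = Counter()
    for raw in diff_text.splitlines():
        if raw.startswith(("--- ", "+++ ", "@@")):
            continue
        if raw.startswith(("+", "-")):
            lines[raw[0] + raw[1:].strip()] += 1
    return lines


def line_recall(reference_diff: str, reproduced_diff: str) -> float:
    """Share of the reference patch's changed lines that the reproduction also changes."""
    reference = changed_lines(reference_diff)
    reproduced = changed_lines(reproduced_diff)
    total = sum(reference.values())
    if total == 0:
        return 0.0  # nothing to recall
    overlap = sum((reference & reproduced).values())  # multiset intersection
    return overlap / total


# Toy example: the reproduction removes the same buggy line but writes a
# different (equivalent) fix, so only half of the reference lines are recalled.
REFERENCE = """--- a/calc.py
+++ b/calc.py
@@ -1,2 +1,2 @@
 def add(a, b):
-    return a - b
+    return a + b
"""
REPRODUCTION = REFERENCE.replace("+    return a + b", "+    return b + a")

if __name__ == "__main__":
    print(line_recall(REFERENCE, REPRODUCTION))  # 0.5
```

A soft-verification filter would then accept a trajectory whose recall clears some threshold instead of demanding a perfect match or a passing test run; the threshold value would be a tuning choice.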

The key insight is that patches don't need to be fully correct to be useful training data. The researchers found that what makes synthetic data high-fidelity is how closely it mirrors a developer's workflow, not whether the final patch is correct. Dropping test execution removes the test infrastructure bottleneck.

Total training cost to reach prior open-source baselines (SkyRL): roughly $400 on commodity hardware. To match top open-weight models like Devstral Small 2: around $12,000. Both figures come from Ai2's tech report, and the release includes all training code and 200,000 synthetic trajectories.

Repository specialization

The more interesting application might be private codebases. SERA can be fine-tuned on 8,000 synthetic trajectories from a specific repository for about $1,300. On Django and SymPy, specialized 32B models matched or exceeded the roughly 110B-parameter teacher model (GLM-4.5-Air) that generated the training data.
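Since each synthetic trajectory starts from a randomly selected function, and SERA has so far been validated only on Python, the first step of building repository-specific data can be sketched with Python's standard `ast` module. This is a hypothetical helper, not Ai2's code; sampling uniformly over every `def` (methods included) is an assumption about how the starting function is chosen.

```python
import ast
import random
from pathlib import Path


def list_functions(repo_root: str) -> list[tuple[str, str, int]]:
    """Return (file path, function name, line number) for every def in a Python repo."""
    found = []
    for path in sorted(Path(repo_root).rglob("*.py")):
        try:
            source = path.read_text(encoding="utf-8")
            tree = ast.parse(source, filename=str(path))
        except (OSError, SyntaxError, ValueError):
            continue  # skip unreadable files and files that aren't valid Python 3
        for node in ast.walk(tree):
            # Methods and nested functions are included alongside top-level defs.
            if isinstance(node, (ast.FunctionDef, ast.AsyncFunctionDef)):
                found.append((str(path), node.name, node.lineno))
    return found


def sample_start_points(repo_root: str, k: int, seed: int = 0) -> list[tuple[str, str, int]]:
    """Pick up to k distinct functions uniformly at random; a fixed seed keeps runs reproducible."""
    functions = list_functions(repo_root)
    rng = random.Random(seed)
    return rng.sample(functions, min(k, len(functions)))
```

Called as `sample_start_points("path/to/checkout", k=8)` (a placeholder path), it returns `(file, name, line)` tuples that a teacher model could be pointed at one by one.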

At 32K context, Django-specialized SERA hit 52.23% compared to GLM-4.5-Air's 51.20%. The SymPy numbers show the same pattern: 51.11% versus 48.89%.

A model one-third the size of its teacher, trained on synthetic data that teacher generated, outperforming that teacher on specific domains. That's the part worth paying attention to.

Claude Code integration

The release includes sera-cli, a proxy that connects SERA to Claude Code. The setup is genuinely minimal:

uv tool install ai2-sera-cli

sera --modal

The proxy handles translation between Claude Code's tool format (Read, Edit, Write, Bash) and the SWE-agent format (str_replace_editor, bash) that SERA was trained on. Path normalization is included. The first run takes about 10 minutes to download 65GB of model weights; subsequent runs start in a couple of minutes.
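The proxy's job can be pictured as a small translation table. The following is a hypothetical sketch, not sera-cli's implementation: it maps SWE-agent calls emitted by the model onto Claude Code tools. The argument names (`command`, `path`, `old_str`, `new_str`, `file_text` on the SWE-agent side; `file_path`, `old_string`, `new_string`, `content` on the Claude Code side) reflect our understanding of those interfaces and should be treated as assumptions, as should the `/testbed` training-time root.

```python
import posixpath

# Assumption: training trajectories used repositories mounted at /testbed
# (as in SWE-bench containers), while Claude Code runs in the real project.
TRAINED_ROOT = "/testbed"


def normalize_path(path: str, workspace: str) -> str:
    """Map a training-time or relative path onto the live workspace directory."""
    path = posixpath.normpath(path)
    if path == TRAINED_ROOT or path.startswith(TRAINED_ROOT + "/"):
        relative = posixpath.relpath(path, TRAINED_ROOT)
        return posixpath.normpath(posixpath.join(workspace, relative))
    if not posixpath.isabs(path):
        return posixpath.normpath(posixpath.join(workspace, path))
    return path


def to_claude_code(tool: str, args: dict, workspace: str) -> tuple[str, dict]:
    """Translate one SWE-agent-style tool call into a Claude Code tool name and arguments."""
    if tool == "bash":
        return "Bash", {"command": args["command"]}
    if tool != "str_replace_editor":
        raise ValueError(f"unsupported tool: {tool}")
    command = args["command"]
    path = normalize_path(args["path"], workspace)
    if command == "view":
        return "Read", {"file_path": path}
    if command == "create":
        return "Write", {"file_path": path, "content": args["file_text"]}
    if command == "str_replace":
        return "Edit", {
            "file_path": path,
            "old_string": args["old_str"],
            "new_string": args.get("new_str", ""),
        }
    # "insert", "undo_edit" and directory views have no one-to-one mapping here.
    raise ValueError(f"unsupported str_replace_editor command: {command}")
```

Keeping path rewriting in one function means every editor command gets the same normalization, which is the kind of mismatch a model trained in a fixed container layout would otherwise trip over.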

For teams, deploy-sera creates persistent vLLM deployments on Modal that stay up until explicitly stopped. Endpoints and API keys can be shared across team members.
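vLLM's server speaks an OpenAI-compatible HTTP API, so a teammate holding a shared endpoint and key could likely query it with nothing beyond the standard library. A sketch, assuming the deployment exposes vLLM's `/v1/chat/completions` route with bearer-token auth; the URL, key, and model name are placeholders, not real deploy-sera output.

```python
import json
import urllib.request


def build_chat_request(base_url: str, api_key: str, model: str, prompt: str) -> urllib.request.Request:
    """Build (but do not send) a chat request for an OpenAI-compatible vLLM endpoint."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 256,
    }
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/v1/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",  # the shared team key
        },
        method="POST",
    )


def send(request: urllib.request.Request, timeout: float = 60.0) -> str:
    """Send the request and return the text of the first choice."""
    with urllib.request.urlopen(request, timeout=timeout) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]


# Hypothetical usage (placeholder URL, key and model name):
# req = build_chat_request("https://your-team-endpoint.example", "YOUR_KEY", "sera-32b", "Explain this traceback")
# print(send(req))
```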

What's actually open

Everything:

- Model weights on Hugging Face under Apache 2.0

- Training code on GitHub

- 200,000 synthetic trajectories

- Claude Code integration tooling

- The full recipe for generating your own data from any repository

The Apache 2.0 license permits commercial use with no revenue thresholds.

The context window caveat

SERA was trained at 32K context, so its gains from longer contexts are limited. At 128K, it falls further behind Devstral Small 2, which reaches 64.8%. The researchers acknowledge this: performance gains from extended context require training at those lengths.

Who built this

The paper lists five authors, but Ai2 notes SERA was "built largely by a single Ai2 researcher" using 2 GPUs for data generation and up to 16 for training the larger models. Tim Dettmers is the senior author, best known for work on quantization methods that let large models run on smaller hardware.

The cost breakdown matters for reproducing results. At $400 to reach SkyRL baselines and $2,000 for the full SERA-32B training, the recipe is within reach of individual researchers. Academic labs that couldn't afford RL-based agent training now have a path.

Honest limitations

The model sometimes tries to call a nonexistent "submit" tool when finished, a training artifact from SWE-bench-style tasks; the CLI proxy handles this. Performance has only been validated on Python repositories, so generalization to other languages is unknown. And performance remains bounded by the teacher model's capability: outside of repository specialization, SERA is unlikely to exceed what its teacher, GLM-4.6, can achieve.
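One plausible way for a proxy to absorb the stray "submit" call is to treat it as the end of the turn rather than forwarding it. This is a hypothetical sketch; the `ToolCall` and `ProxyReply` types and the "Done." fallback text are illustrative and not taken from sera-cli.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class ToolCall:
    name: str
    arguments: dict = field(default_factory=dict)


@dataclass
class ProxyReply:
    text: str
    tool_call: Optional[ToolCall]  # None tells the client the turn is finished


def handle_model_output(text: str, call: Optional[ToolCall]) -> ProxyReply:
    """Drop a stray "submit" call (a SWE-bench training habit) and end the turn instead."""
    if call is not None and call.name == "submit":
        # Claude Code exposes no submit tool, so there is nothing to forward it to.
        return ProxyReply(text=text or "Done.", tool_call=None)
    return ProxyReply(text=text, tool_call=call)
```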

NVIDIA collaborated on inference optimization. On four H100 GPUs in BF16, SERA achieves roughly 1,950 peak output tokens per second. FP8 pushes that to 3,700. On newer Blackwell B200 systems with NVFP4, throughput reaches 8,600.

These are datacenter configurations. For local use, the 8B model fits on a single RTX 4090, while the 32B model needs an 80GB card at minimum.
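The hardware claims follow from simple weight-memory arithmetic. A rough sketch, assuming BF16 weights (2 bytes per parameter) and nominal 8B/32B parameter counts, and ignoring KV cache and runtime overhead, which only add to the total:

```python
# Assumptions: BF16 weights at 2 bytes per parameter and nominal 8B/32B
# parameter counts. KV cache, activations and framework overhead are ignored,
# so real memory use is higher than these figures.
BYTES_PER_PARAM_BF16 = 2


def weights_gb(params_billion: float) -> float:
    """Gigabytes (1e9 bytes) needed just to hold the weights in BF16."""
    return params_billion * BYTES_PER_PARAM_BF16


def spare_gb(params_billion: float, card_gb: float) -> float:
    """Memory left for the KV cache after loading weights; negative means no fit."""
    return card_gb - weights_gb(params_billion)


if __name__ == "__main__":
    for model, params in [("SERA-8B", 8), ("SERA-32B", 32)]:
        for card, capacity in [("24GB RTX 4090", 24), ("48GB card", 48), ("80GB card", 80)]:
            print(f"{model} on {card}: weights {weights_gb(params):.0f} GB, "
                  f"spare {spare_gb(params, capacity):+.0f} GB")
```

The 8B model's roughly 16GB of weights leaves about 8GB of a 24GB RTX 4090 for the KV cache, while the 32B model's roughly 64GB (consistent with the 65GB download) overflows a 48GB card and leaves about 16GB on an 80GB one.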

The Devstral comparison

Mistral's Devstral Small 2, released in December, reports 68% on SWE-Bench Verified, and Mistral's larger Devstral 2 (123B parameters) reaches 72.2%. Devstral Small 2 is smaller than SERA-32B, at 24B parameters, but it was trained with substantially more resources than SERA's $400 to $12,000 budget.

The comparison that matters: SERA-32B at 32K context (49.5%) versus Devstral Small 2 at 32K context (50.0%). Within error bars. At 64K, Devstral pulls ahead.
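"Within error bars" can be checked with back-of-envelope binomial arithmetic. A rough sketch, assuming each of the 500 issues is an independent pass/fail trial and ignoring run-to-run variance; because both models were scored on the same issues, a paired test such as McNemar's would be more precise.

```python
import math

N_ISSUES = 500  # size of SWE-Bench Verified


def std_error(rate: float, n: int = N_ISSUES) -> float:
    """Binomial standard error of a resolve rate over n independent issues."""
    return math.sqrt(rate * (1 - rate) / n)


def gap_in_std_errors(rate_a: float, rate_b: float, n: int = N_ISSUES) -> float:
    """Absolute gap between two rates, in standard errors of the (unpaired) difference."""
    se_diff = math.sqrt(std_error(rate_a, n) ** 2 + std_error(rate_b, n) ** 2)
    return abs(rate_a - rate_b) / se_diff


if __name__ == "__main__":
    sera, devstral = 0.495, 0.500  # 32K-context resolve rates quoted above
    print(f"SE per model: {100 * std_error(sera):.1f} points")          # 2.2
    print(f"Gap: {gap_in_std_errors(sera, devstral):.2f} standard errors")  # 0.16
```

With both rates near 50%, one standard error is about 2.2 percentage points per model, so the 0.5-point gap is roughly 0.16 standard errors of the difference, far too small to separate the two models.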

Devstral uses a dense architecture, and SERA fine-tunes dense Qwen3 models, so neither relies on mixture-of-experts. Qwen3-Coder, the current open-source leader, is a 480B-parameter MoE that activates 35B parameters per token.