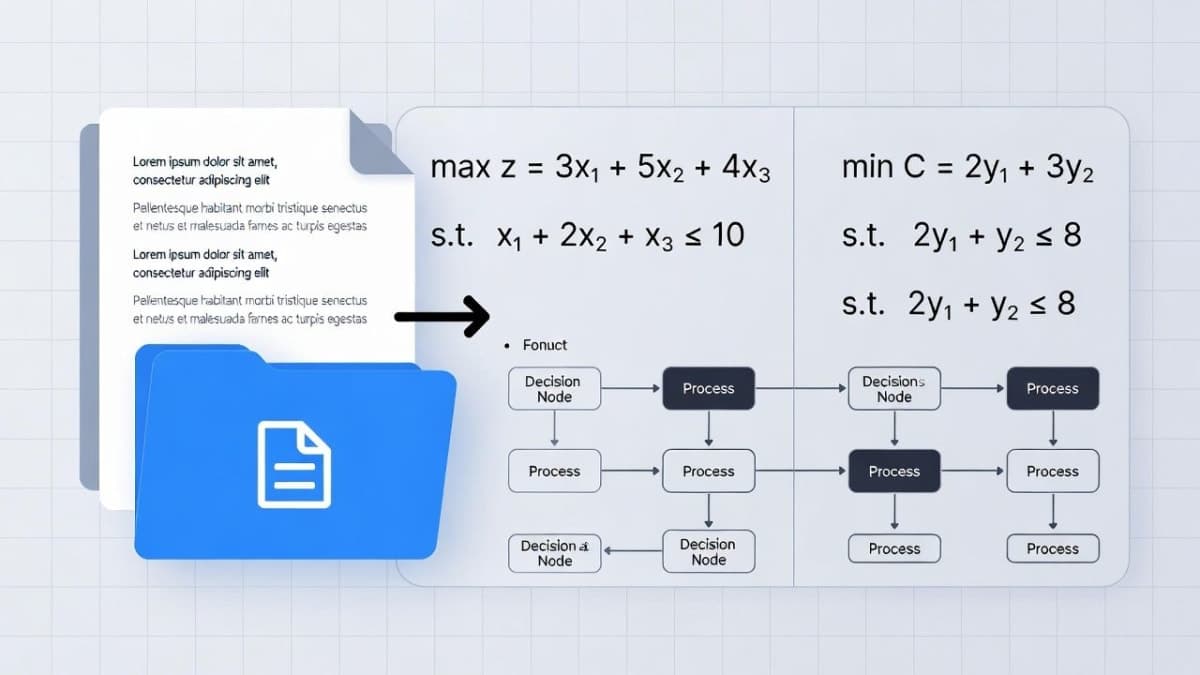

Writing optimization models is slow. Defining objectives, constraints, and variables for problems like supply chain routing or production scheduling can take experienced teams days or weeks. Microsoft Research built OptiMind to shortcut that process.

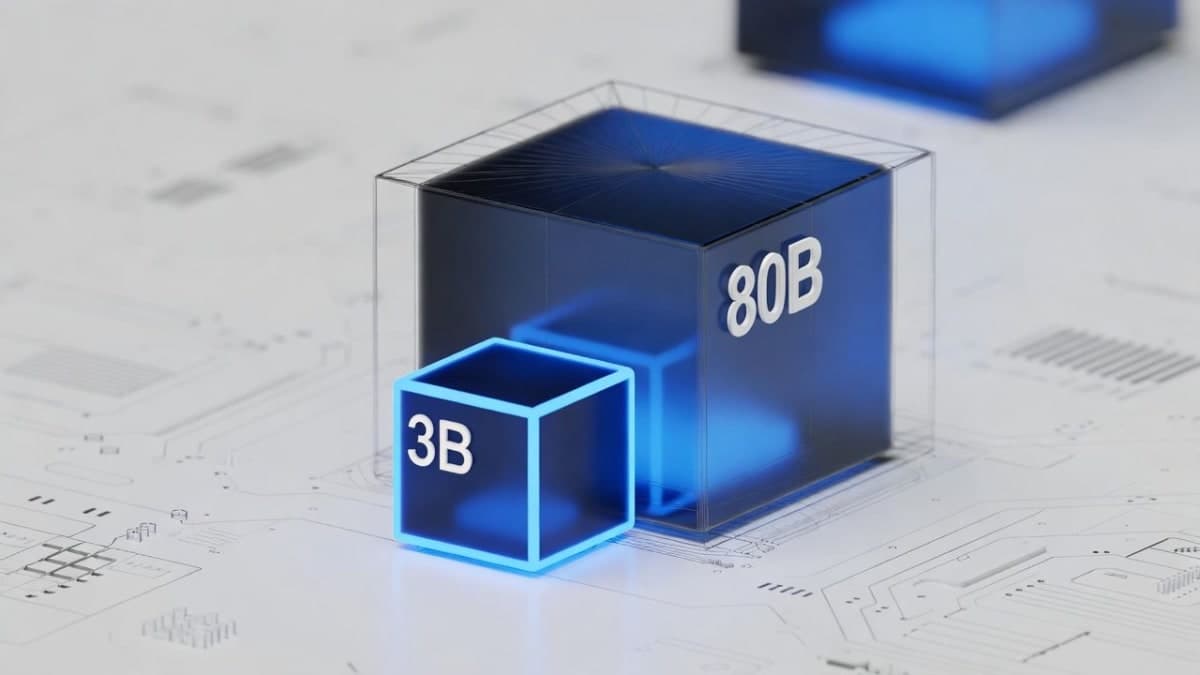

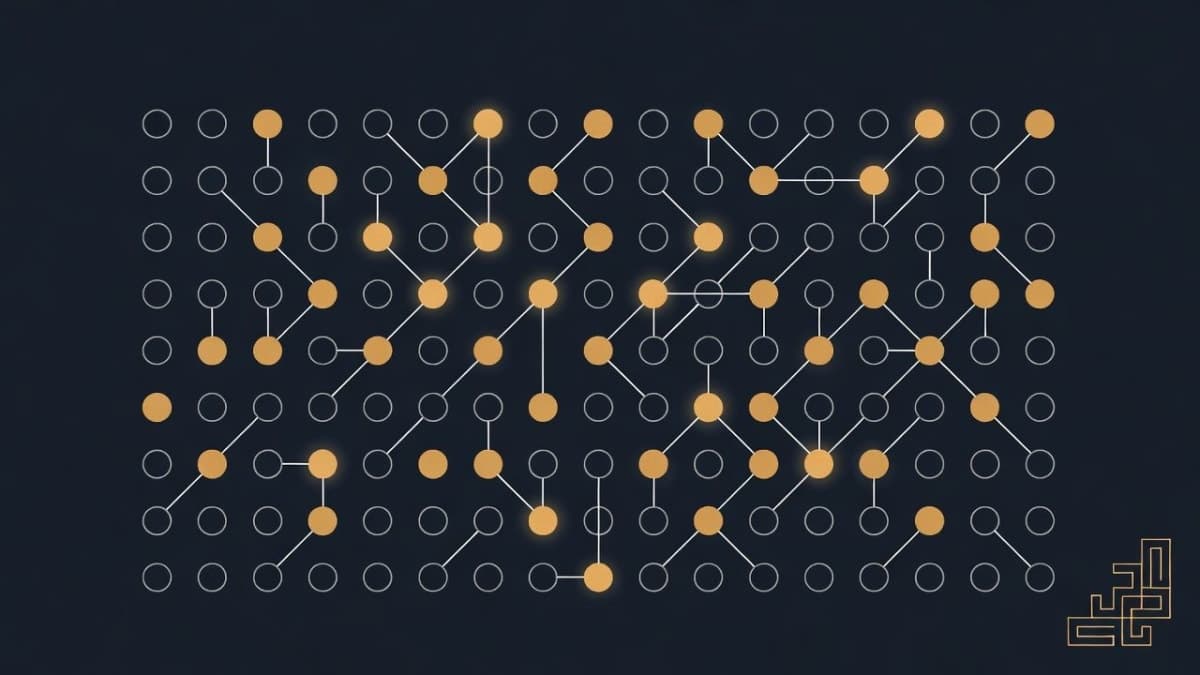

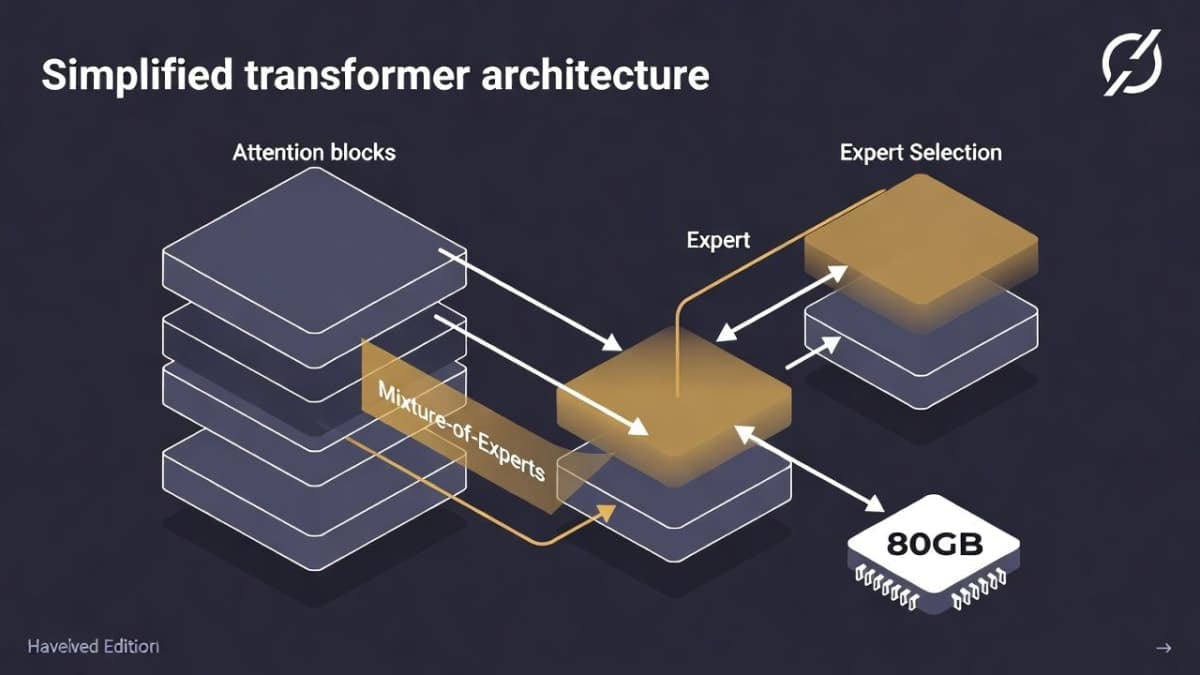

The model takes natural language problem descriptions and outputs mathematical formulations as code compatible with GurobiPy, the Python interface to the Gurobi optimization solver. It uses a mixture-of-experts architecture with 20 billion total parameters, of which only 3.6 billion are active at inference, which makes it small enough to run locally. The 128K context window handles complex multi-constraint problems. Microsoft trained it in about 8 hours on B200 GPUs using cleaned versions of public operations research datasets, then released it under the MIT license.
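To make "formulation" concrete, here is a minimal sketch of the three pieces such a model has to produce: decision variables, an objective, and constraints. The production problem and all numbers are hypothetical, and the example solves by brute-force enumeration in plain Python rather than with Gurobi, so it is an illustration of the structure and not OptiMind's actual output.

```python
from itertools import product

# Hypothetical data for a toy production-planning problem.
PROFIT = {"chairs": 30, "tables": 50}         # profit per unit
HOURS_PER_UNIT = {"chairs": 1, "tables": 2}   # labor hours per unit
WOOD_PER_UNIT = {"chairs": 3, "tables": 2}    # wood units per unit
LABOR_LIMIT = 8
WOOD_LIMIT = 12


def is_feasible(plan):
    """Constraints: total labor and total wood must stay within limits."""
    labor = sum(HOURS_PER_UNIT[p] * qty for p, qty in plan.items())
    wood = sum(WOOD_PER_UNIT[p] * qty for p, qty in plan.items())
    return labor <= LABOR_LIMIT and wood <= WOOD_LIMIT


def objective(plan):
    """Objective: total profit of a production plan."""
    return sum(PROFIT[p] * qty for p, qty in plan.items())


def solve(max_units=10):
    """Enumerate integer plans (the decision variables) and keep the best."""
    best_plan, best_value = None, float("-inf")
    for chairs, tables in product(range(max_units + 1), repeat=2):
        plan = {"chairs": chairs, "tables": tables}
        if is_feasible(plan) and objective(plan) > best_value:
            best_plan, best_value = plan, objective(plan)
    return best_plan, best_value


if __name__ == "__main__":
    plan, value = solve()
    print(plan, value)
```

Enumeration only works at toy scale. A real solver such as Gurobi takes the same three ingredients and searches far larger spaces, which is why getting the formulation right is the step that matters.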

The interesting part isn't the model size. According to Microsoft's research blog, 30-50% of existing public optimization benchmarks contained flawed or incomplete solutions. The team developed a semi-automated cleaning process that categorizes problems by type (scheduling, routing, network design) and identifies common error patterns within each class. At inference, OptiMind uses these same class-specific hints to guide its outputs and avoid known mistakes. On cleaned benchmarks, the company reports accuracy improvements of 13-21% over the base model and competitive results against much larger systems, though these are self-reported figures.
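The idea of class-specific hints at inference can be sketched as follows. This is an illustrative guess at the general pattern, not Microsoft's implementation: the keyword classifier, the hint text, and the function names `classify` and `build_prompt` are all hypothetical.

```python
# Hypothetical keyword lists per problem class; not taken from OptiMind.
CLASS_KEYWORDS = {
    "scheduling": ("shift", "schedule", "deadline", "machine"),
    "routing": ("route", "vehicle", "depot", "tour"),
    "network design": ("network", "facility", "link", "capacity"),
}

# Hypothetical hints describing common formulation mistakes per class.
CLASS_HINTS = {
    "scheduling": ["Assign each job to exactly one machine or time slot."],
    "routing": ["Include subtour elimination constraints."],
    "network design": ["Tie flow variables to the binary open/close decisions."],
}


def classify(problem_text):
    """Return the class whose keywords appear most often, or None."""
    text = problem_text.lower()
    scores = {
        label: sum(text.count(word) for word in words)
        for label, words in CLASS_KEYWORDS.items()
    }
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else None


def build_prompt(problem_text):
    """Prepend class-specific hints to the problem description."""
    label = classify(problem_text)
    hints = CLASS_HINTS.get(label, [])
    if not hints:
        return problem_text
    hint_lines = "\n".join(f"- {hint}" for hint in hints)
    return f"Hints for {label} problems:\n{hint_lines}\n\nProblem:\n{problem_text}"


if __name__ == "__main__":
    print(build_prompt("Plan a route for each vehicle leaving the depot."))
```

A production system would presumably classify with something stronger than keyword counts. The point of the sketch is only that the hints are selected per problem class and attached to the input before the model generates a formulation.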

The technical paper and evaluation code are on GitHub. Microsoft Foundry hosts a preview for enterprise testing.

The Bottom Line: A specialized model that targets formulation bottlenecks rather than solver performance, with the training data cleanup potentially more valuable than the model itself.

QUICK FACTS

- 20B total parameters, 3.6B active (MoE architecture)

- 128,000 token context length

- MIT license, weights on Hugging Face

- Outputs GurobiPy-compatible code

- Trained October 2025, released November 2025

- 13-21% accuracy improvement over base model (company-reported)