Zyphra, the Palo Alto startup behind the Zamba hybrid SSM models, published a paper on February 3rd describing what it calls Online Vector-Quantized Attention, or OVQ. The work, led by researcher Nick Alonso with supervision from Tomas Figliolia and Beren Millidge, tries to thread a needle that the field has been poking at for years: how do you get self-attention's recall ability without its memory bill?

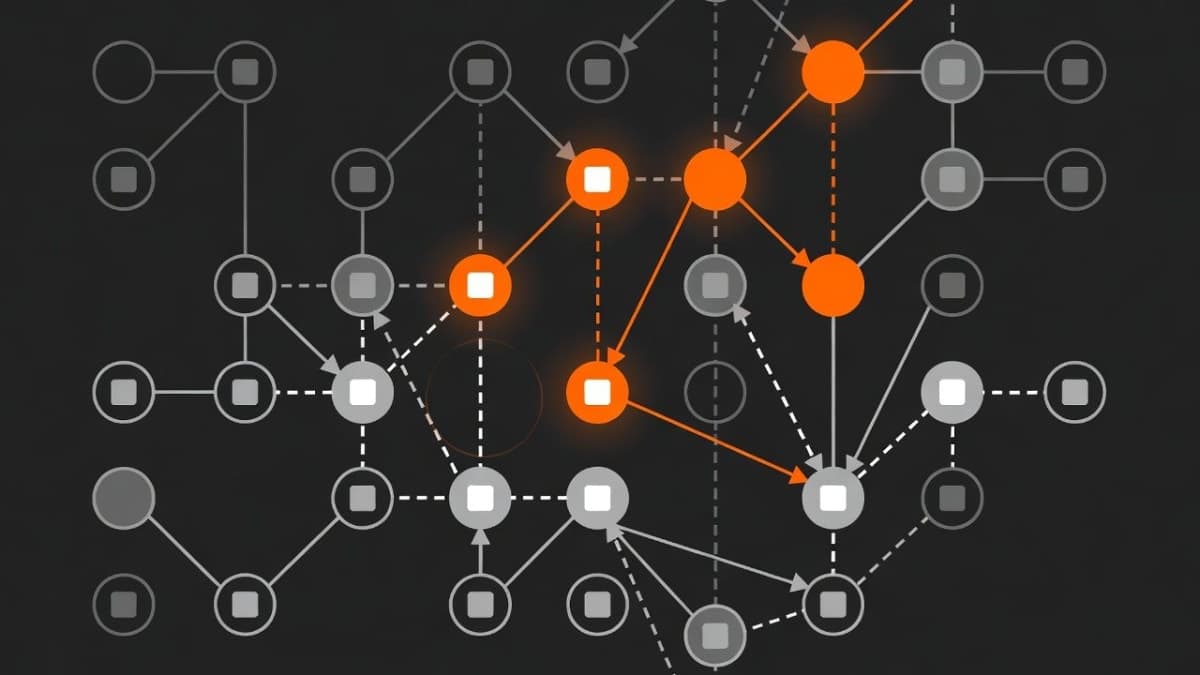

The answer, according to Zyphra, involves replacing the ever-growing KV cache with a dictionary of centroids that learns on the fly. Think k-means clustering, but running inside the attention layer during inference.

The problem OVQ is actually solving

There's a well-known trilemma in sequence mixing. Full self-attention is great at recall and in-context learning but costs quadratic compute and linearly growing memory. Linear attention variants (Mamba, RWKV, RetNet and friends) flip that, giving you constant memory and linear compute, but they struggle badly when you need to retrieve specific information from deep in the context. Compressing the entire history into a fixed-size state throws away too much of the detail that exact retrieval depends on.
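To put rough numbers on the memory side of that trade-off, here is a back-of-the-envelope sketch. The model shape (32 layers, 8 KV heads, head dimension 128, 2-byte values) and the function names are hypothetical choices for illustration, not figures from the paper.

```python
def kv_cache_bytes(seq_len, n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_value=2):
    """Bytes to cache keys and values for one sequence (hypothetical model shape)."""
    # Factor of 2: one tensor for keys, one for values.
    return 2 * seq_len * n_layers * n_kv_heads * head_dim * bytes_per_value


def fixed_state_bytes(n_slots, n_layers=32, n_kv_heads=8, head_dim=128, bytes_per_value=2):
    """Bytes for a state holding n_slots key/value pairs per head, whatever the sequence length."""
    return 2 * n_slots * n_layers * n_kv_heads * head_dim * bytes_per_value


for seq_len in (4_096, 65_536):
    cache_mib = kv_cache_bytes(seq_len) / 2**20
    state_mib = fixed_state_bytes(4_096) / 2**20
    print(f"{seq_len:>6} tokens: KV cache {cache_mib:.0f} MiB, fixed 4096-slot state {state_mib:.0f} MiB")
```

The KV cache scales with the sequence, so going from 4K to 64K tokens multiplies it by 16, while the fixed-size state costs the same at both lengths.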

The paper frames this nicely through the lens of catastrophic forgetting. Linear attention's state update works like an MLP trained with gradient descent on non-IID data: new information overwrites old information because every update touches the entire state. This is a known pathology in continual learning, and it is a partial explanation for why models like Mamba choke on needle-in-a-haystack tasks at long contexts.

OVQ's bet is that sparse updates sidestep this. Instead of smearing each new token across the full state, OVQ finds the single closest centroid and nudges it. Everything else stays put.
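The difference between the two update styles is easy to see in a toy NumPy sketch. The outer-product state update stands in for a generic linear-attention recurrence, and the step size of 0.1 and all shapes are arbitrary assumptions rather than the paper's formulation.

```python
import numpy as np

rng = np.random.default_rng(0)
d = 8
k, v = rng.normal(size=d), rng.normal(size=d)

# Dense update: the state accumulates an outer product of value and key,
# so a single token generally changes every entry of the state.
S = rng.normal(size=(d, d))
S_new = S + np.outer(v, k)
dense_changed = int(np.sum(S_new != S))

# Sparse update: only the centroid nearest to the new key is moved.
C = rng.normal(size=(16, d))
nearest = int(np.argmin(np.linalg.norm(C - k, axis=1)))
C_new = C.copy()
C_new[nearest] += 0.1 * (k - C[nearest])  # 0.1 is an arbitrary step size
sparse_rows_changed = int(np.sum(np.any(C_new != C, axis=1)))

print(dense_changed, "of", S.size, "state entries changed by the dense update")
print(sparse_rows_changed, "of", len(C), "centroids changed by the sparse update")
```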

How the clustering actually works

The mechanism builds on an earlier idea called Transformer-VQ from Lingle in 2023, which replaced keys with their nearest centroids from a learned codebook. That original approach had the right shape (constant memory, linear compute) but terrible performance on anything requiring actual long-context ability. The Zyphra paper shows VQ-attention falling apart even before reaching its 4K training length on a simple key-value retrieval task, and adding more centroids barely helped.
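As a rough illustration of the frozen-codebook idea, the sketch below snaps each key to its nearest centroid and then shows why that permits a constant-size state: tokens sharing a centroid get identical attention scores, so their values can be pooled per centroid. This is a simplified single-query, single-head toy with made-up shapes and a random codebook, not Lingle's actual implementation.

```python
import numpy as np


def quantize_keys(keys, codebook):
    """Replace each key with its nearest codebook entry (squared Euclidean distance)."""
    dists = ((keys[:, None, :] - codebook[None, :, :]) ** 2).sum(axis=-1)
    idx = dists.argmin(axis=1)
    return codebook[idx], idx


def softmax(x):
    e = np.exp(x - x.max(axis=-1, keepdims=True))
    return e / e.sum(axis=-1, keepdims=True)


rng = np.random.default_rng(0)
T, d, n_codes = 32, 16, 8
keys, values = rng.normal(size=(T, d)), rng.normal(size=(T, d))
query = rng.normal(size=(1, d))
codebook = rng.normal(size=(n_codes, d))  # stands in for a codebook frozen after training

# Attention over quantized keys, computed token by token.
k_hat, idx = quantize_keys(keys, codebook)
weights = softmax(query @ k_hat.T / np.sqrt(d))
out = weights @ values

# Same result from per-centroid statistics only: counts and summed values.
counts = np.bincount(idx, minlength=n_codes)
value_sums = np.zeros((n_codes, d))
np.add.at(value_sums, idx, values)
scores = (query @ codebook.T / np.sqrt(d))[0]
e = np.exp(scores - scores.max())
out_compact = (e @ value_sums) / (counts * e).sum()

print("outputs match:", np.allclose(out[0], out_compact))
```

The compact form never looks at individual tokens, which is where the constant memory comes from. It is also where the recall problem comes from: two keys that land on the same frozen centroid become indistinguishable.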

OVQ's fix is to make the dictionary adaptive. Rather than learning centroids during training and freezing them, OVQ updates both key and value dictionaries during the forward pass itself. Each incoming token gets matched to its nearest centroid, and that centroid (and only that centroid) gets updated via an exponential moving average. The theoretical backing comes from Gaussian mixture regression, which is a detail I appreciate even if the connection feels somewhat stylized.
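A minimal sketch of that update step, under stated assumptions: the function name `online_update`, the fixed decay of 0.9, and the choice to update the value centroid paired with the matched key centroid are all illustrative guesses, and the paper's exact update rule may differ.

```python
import numpy as np


def online_update(key_dict, val_dict, k, v, decay=0.9):
    """Update, in place, only the key centroid nearest to k and its paired value.

    decay is a hypothetical fixed EMA rate chosen for illustration.
    """
    j = int(np.argmin(((key_dict - k) ** 2).sum(axis=1)))  # nearest key centroid
    key_dict[j] = decay * key_dict[j] + (1.0 - decay) * k
    val_dict[j] = decay * val_dict[j] + (1.0 - decay) * v
    return j


rng = np.random.default_rng(0)
n_centroids, d = 4, 3
key_dict = rng.normal(size=(n_centroids, d))
val_dict = rng.normal(size=(n_centroids, d))
keys_before, vals_before = key_dict.copy(), val_dict.copy()

k, v = rng.normal(size=d), rng.normal(size=d)
j = online_update(key_dict, val_dict, k, v)

# Every row other than j is bit-for-bit identical to what it was before.
untouched = [i for i in range(n_centroids) if i != j]
print("updated centroid:", j)
print("others unchanged:", np.array_equal(key_dict[untouched], keys_before[untouched]))
```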

The state grows toward a hard upper bound, quickly at first and then more slowly. Early in a sequence, new tokens are more likely to trigger the creation of new centroids (when nothing in the dictionary is close enough). As the dictionary fills up, it shifts to refinement mode, adjusting existing entries rather than adding new ones. Once the cap is reached, memory stops growing entirely.
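The grow-then-refine behavior can be sketched as a small class. The distance threshold used to decide what counts as "close enough", the fixed decay, and the class and method names are hypothetical, since the article does not spell out the paper's creation rule.

```python
import numpy as np


class GrowingDictionary:
    """Toy centroid store that grows until max_size, then only refines."""

    def __init__(self, dim, max_size, threshold, decay=0.9):
        self.centroids = np.zeros((max_size, dim))  # preallocated up to the cap
        self.size = 0
        self.max_size = max_size
        self.threshold = threshold  # hypothetical "close enough" distance
        self.decay = decay          # hypothetical EMA rate

    def observe(self, k):
        if self.size > 0:
            dists = np.linalg.norm(self.centroids[: self.size] - k, axis=1)
            j = int(dists.argmin())
            is_novel = dists[j] > self.threshold
        else:
            j, is_novel = 0, True
        if is_novel and self.size < self.max_size:
            # Nothing is close enough and there is room: create a new centroid.
            self.centroids[self.size] = k
            self.size += 1
        else:
            # Otherwise refine the nearest existing centroid.
            self.centroids[j] = self.decay * self.centroids[j] + (1 - self.decay) * k
        return self.size


rng = np.random.default_rng(0)
store = GrowingDictionary(dim=8, max_size=16, threshold=1.0)
sizes = [store.observe(rng.normal(size=8)) for _ in range(200)]
print("size after 1, 10 and 200 tokens:", sizes[0], sizes[9], sizes[-1])
```

Raising `max_size` is the budget knob in this toy: memory is allocated once up front and the dictionary never exceeds it.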

What the experiments show

The results are from synthetic tasks and language modeling, not real-world applications. I want to be upfront about that, because synthetic benchmarks are where these ideas always look good.

That said, some numbers are worth attention. A model trained on 4K tokens extrapolated cleanly to 64K on in-context recall tasks, while using a state size roughly 10-25x smaller than full self-attention would require. The original VQ-attention collapsed well before 4K on the same task.

The in-context learning results are where OVQ separates itself most clearly from the original VQ approach. On a linear regression ICL task (learning to approximate a function from examples provided in-context), vanilla VQ-attention failed completely. OVQ matched self-attention performance using approximately 4K centroids. That's a big gap to close, and I'm not sure the theoretical framework fully explains why the online updates help so much more here than they do in simpler recall.

Comparisons against Mamba2 and delta networks also favor OVQ for context stability. The paper argues this comes from the sparsity of updates, which prevents the kind of wholesale state corruption that gated recurrences can suffer from. It's a plausible story. Gated DeltaNet, a recent approach from NVIDIA that combines Mamba2's gating with targeted delta updates, tries to solve a similar problem from the opposite direction, using dense states with smarter forgetting rather than sparse states with minimal forgetting.

What's not in the paper

The models tested are small. The paper doesn't show results at the scale where any of this would matter for production systems. OVQ was tested on architectures interleaving sliding window attention with OVQ layers, not on standalone OVQ models at 7B or 70B parameters. Whether the clustering approach holds up when the key space becomes vastly higher-dimensional and the distribution of queries gets more complex is genuinely unclear.

There's also the overhead question. The paper reports 6.7% computational overhead from the online dictionary updates, but that number comes from "heavily optimized custom kernels." If you're trying to reproduce this with standard PyTorch, expect worse. Maybe much worse. The gap between "works in our optimized setup" and "works in yours" is a recurring theme in efficient attention research.

And then there's positional in-context recall, where the paper acknowledges OVQ performs "slightly worse" than full self-attention. Slightly worse on a controlled benchmark has a way of becoming meaningfully worse in the wild.

Why this is interesting anyway

What caught my attention isn't the specific numbers but the framing. Most efficient attention research tries to approximate self-attention more cheaply. OVQ is doing something different: it's treating the memory state as an associative memory trained via online clustering, drawing on ideas from computational neuroscience (Alonso's background is a PhD in cognitive science from UC Irvine, and he's published on Hopfield-like networks for continual learning). The connection between sparse updates in k-means-style clustering and resistance to catastrophic forgetting is well-established in that literature, even if applying it inside a transformer attention layer is new.

The memory state growing toward a hard ceiling is an elegant property. You get to pick your budget at deployment time and the model adapts, with larger allocations consistently giving better performance. That's a nicer knob to turn than the binary choice between "full KV cache" and "hope the compression doesn't break something important."

Whether OVQ leads anywhere practical depends on scaling experiments that don't exist yet. Zyphra's track record with hybrid architectures (Zamba, BlackMamba) suggests they'll follow through, but a paper on synthetic benchmarks at small scale is a starting point, not a destination.

The FTC has no say on this one. Zyphra just needs to train a bigger model.