Researchers from Tsinghua University and Microsoft have released X-Coder, a 7-billion-parameter coding model trained without a single line of human-written training data. The arXiv paper, posted in January, details a model that scores 62.9% on LiveCodeBench v5 and 55.8% on v6, beating models with twice its parameter count.

The "no real data" bet

The pitch here is straightforward, even if the implications aren't: you can build a competitive coding model using nothing but machine-generated problems, solutions, and test cases. X-Coder's training pipeline, called SynthSmith, starts by extracting coding features (algorithms, data structures, optimization patterns) from about 10,000 seed examples, then expands that pool to roughly 177,000 entries through an evolutionary process. From there, it synthesizes entirely new competitive programming tasks.
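To make the "features in, tasks out" idea concrete, here is a toy sketch of feature-based evolution. It is not SynthSmith's code. The function names (`evolve_feature_pool`, `synthesize_task_spec`) are hypothetical, and the only "evolutionary" operator is a plain set union of two parent entries, swapped in for whatever mutation and recombination steps the real pipeline uses. What it does show is how a small seed set can grow into a much larger pool of distinct feature combinations, each of which can then seed a new problem.

```python
import random

def evolve_feature_pool(seeds, target_size, rng):
    """Grow a pool of feature entries by recombining existing ones (toy version).

    Each entry is a frozenset of feature names. A child is the union of two
    randomly chosen parents; duplicates are discarded, so the pool only grows
    with combinations it has not seen before.
    """
    if len(seeds) < 2:
        raise ValueError("need at least two seed features to recombine")
    pool = [frozenset([s]) for s in seeds]
    seen = set(pool)
    attempts = 0
    while len(pool) < target_size:
        attempts += 1
        if attempts > 100 * target_size:
            break  # the seeds cannot yield enough distinct combinations
        a, b = rng.sample(pool, 2)
        child = a | b
        if child not in seen:
            seen.add(child)
            pool.append(child)
    return pool

def synthesize_task_spec(pool, rng):
    """Turn one evolved entry into a (hypothetical) task-generation prompt."""
    features = sorted(rng.choice(pool))
    return "Write a competitive-programming problem that requires: " + ", ".join(features)

rng = random.Random(0)
seeds = ["binary search", "segment tree", "dynamic programming", "greedy", "bitmask"]
pool = evolve_feature_pool(seeds, target_size=15, rng=rng)
print(len(pool))  # 15
print(synthesize_task_spec(pool, rng))
```

In a real pipeline the prompt would go to a model that writes the problem, reference solutions, and tests; here it is just a string.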

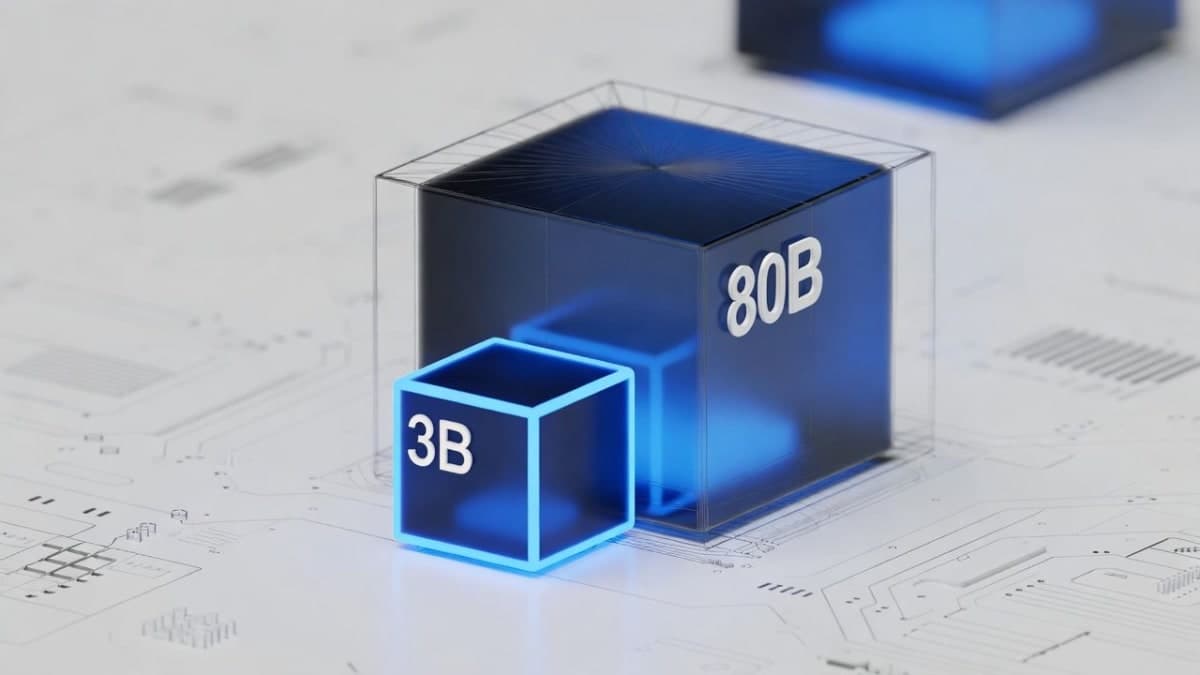

"Scaling laws hold on our synthetic dataset," the authors write in the paper, which is the kind of claim that deserves scrutiny. But the benchmark numbers, at least, are hard to argue with. On LiveCodeBench v5, X-Coder's 7B model hit a 62.9% pass rate (averaged across 8 attempts), outperforming both DeepCoder-14B-Preview and AReal-boba2-14B. Those are 14B models. Double the parameters.
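"Averaged across 8 attempts" is usually read as avg@8: sample eight solutions per problem, score each problem by the fraction of attempts that pass, then average over problems. A minimal sketch, assuming that reading (the `avg_at_k` helper and the toy data are illustrative, not taken from LiveCodeBench's own tooling):

```python
from statistics import mean

def avg_at_k(results):
    """Mean per-problem pass fraction over k sampled attempts.

    `results` maps a problem ID to a list of booleans, one per attempt
    (True if that attempt passed all tests).
    """
    per_problem = [sum(attempts) / len(attempts) for attempts in results.values()]
    return mean(per_problem)

# Made-up results: k = 8 attempts on three problems.
toy = {
    "p1": [True] * 8,                # 8/8 -> 1.0
    "p2": [True] * 4 + [False] * 4,  # 4/8 -> 0.5
    "p3": [False] * 8,               # 0/8 -> 0.0
}
print(f"avg@8 = {avg_at_k(toy):.1%}")  # avg@8 = 50.0%
```

Averaging over several attempts smooths out lucky or unlucky single samples, which matters when the headline gaps between models are a few points.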

What the benchmarks don't say

The interesting finding isn't the topline score. It's how the model gets there, and what that tells us about data quality versus quantity.

The team ran scaling experiments with the SynthSmith pipeline and found something counterintuitive: task variety matters more than solution variety. A dataset with 64,000 unique tasks (one solution each) outperformed datasets with fewer tasks but multiple solutions per problem. Pass rates climbed from 43.7% at 32,000 tasks to 62.7% at 192,000 tasks, a progression that looks like a clean log curve. Almost suspiciously clean, though the team's ablation comparisons make the trend credible enough.
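Only the two endpoints are quoted here, so they can't test whether the curve really is logarithmic, but they do pin down what a log curve through them would imply. A quick sketch, assuming pass rate is linear in log2 of the task count (base 2 is chosen only so the slope reads as points gained per doubling):

```python
import math

# Endpoints reported for the SynthSmith scaling runs: (tasks, pass rate in %).
small_n, small_pass = 32_000, 43.7
large_n, large_pass = 192_000, 62.7

# Assumed model: pass = a + b * log2(tasks). Two points fix a and b exactly.
b = (large_pass - small_pass) / math.log2(large_n / small_n)
a = small_pass - b * math.log2(small_n)

def predicted_pass(tasks):
    """Pass rate (%) implied by the two-point log-linear fit."""
    return a + b * math.log2(tasks)

print(f"gain per doubling of tasks: {b:.2f} points")  # 7.35
print(f"implied pass rate at 64K tasks: {predicted_pass(64_000):.1f}%")
```

Going from 32,000 to 192,000 tasks is about 2.6 doublings, so under this assumption each doubling of unique tasks buys roughly 7.35 points.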

Reinforcement learning added another 4.6 percentage points on top of supervised fine-tuning. Not nothing, but the heavy lifting was clearly in the SFT stage.

One data point worth lingering on: the Qwen3-8B base model (which X-Coder builds on) scored 88.1 on an older LiveCodeBench version but dropped to 57.5 on the newer one. X-Coder shows a smaller gap of about 17 points between versions. The team frames this as evidence that synthetic training reduces benchmark contamination. Maybe. Or maybe it just means the model hasn't been trained on the kinds of problems that leak into older benchmarks. Either interpretation favors synthetic data, which is the point.
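The paragraph gives X-Coder's gap directly but leaves Qwen3-8B's implicit. Working it out from the two quoted scores (variable names are just for illustration; X-Coder's per-version scores aren't given here, only its roughly 17-point gap):

```python
# Qwen3-8B scores on the older and newer LiveCodeBench versions, as cited above.
qwen_old, qwen_new = 88.1, 57.5
qwen_gap = qwen_old - qwen_new   # absolute drop in points
qwen_rel = qwen_gap / qwen_old   # drop as a fraction of the older score

xcoder_gap = 17.0  # "about 17 points", as reported

print(f"Qwen3-8B drop: {qwen_gap:.1f} points ({qwen_rel:.0%} of its older score)")
print(f"X-Coder drop: ~{xcoder_gap:.0f} points, about {xcoder_gap / qwen_gap:.0%} of Qwen3-8B's")
```

Qwen3-8B loses about 30.6 points, so X-Coder's drop is a little over half as large.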

Can you actually use it?

The full stack is open. The GitHub repo contains the SynthSmith pipeline code, training recipes for both SFT and RL stages, and Docker configurations. Datasets are available on HuggingFace under the IIGroup organization: a 376K-example SFT dataset and a 40K-example RL dataset (roughly 17GB total). The model itself is built on Qwen3's architecture.

Training required 128 Nvidia H20 GPUs for 220 hours (supervised fine-tuning) and 32 H200 GPUs over seven days (reinforcement learning). Not cheap, but not unreasonable for an academic lab with Microsoft's backing. Whether independent researchers can replicate this depends less on the code and more on GPU access, as usual.
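Converted to GPU-hours, assuming every GPU ran for the full stated duration:

```python
# GPU budgets as reported above.
sft_gpus, sft_hours = 128, 220  # Nvidia H20, supervised fine-tuning
rl_gpus, rl_hours = 32, 7 * 24  # H200, seven days of reinforcement learning

sft_gpu_hours = sft_gpus * sft_hours
rl_gpu_hours = rl_gpus * rl_hours

print(f"SFT: {sft_gpu_hours:,} H20 GPU-hours")  # SFT: 28,160 H20 GPU-hours
print(f"RL:  {rl_gpu_hours:,} H200 GPU-hours")  # RL:  5,376 H200 GPU-hours
```

The two stages ran on different hardware, so the totals shouldn't be summed as if they were the same unit.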

The team behind X-Coder comes from Tsinghua's Intelligent Interaction Group (IIGroup), with co-authors from Microsoft Research Asia and Wuhan University. Lead author Jie Wu, a master's student at Tsinghua, has noted that computational constraints, not pipeline limitations, are the main barrier to scaling further.

Datasets and code are live now. Model weights, according to coverage from late January, are planned for release, but availability should be confirmed on the IIGroup HuggingFace page directly.