Researchers from Anthropic and Oxford have identified a single direction in language model activation space that determines whether a chatbot stays helpful or drifts into stranger territory. The technical paper, published January 15, introduces both a diagnostic tool and a practical intervention for stabilizing AI behavior.

The work grew out of the MATS and Anthropic Fellows programs. Christina Lu from Oxford led the research alongside Jack Gallagher, Jonathan Michala, Kyle Fish, and Jack Lindsey from Anthropic.

What they actually found

The team prompted three open-weight models (Gemma 2 27B, Qwen 3 32B, and Llama 3.3 70B) to adopt 275 different personas, ranging from consultant to ghost to leviathan, and extracted the neural activation patterns associated with each role.
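The article does not spell out how each role's activation pattern is summarized. A common approach, assumed here, is to average the residual-stream activations at one layer over the tokens of the model's in-role responses. The sketch below does that with random numbers standing in for real activations; the helper name `persona_vectors` and its input format are hypothetical.

```python
import numpy as np


def persona_vectors(activations_by_role: dict[str, np.ndarray]) -> tuple[list[str], np.ndarray]:
    """Average each role's activations into a single persona vector.

    activations_by_role maps a role name to an array of shape
    (num_tokens, hidden_dim): activations collected at one layer while the
    model answered in that role (hypothetical format).
    Returns the sorted role names and a (num_roles, hidden_dim) matrix.
    """
    roles = sorted(activations_by_role)
    matrix = np.stack([activations_by_role[role].mean(axis=0) for role in roles])
    return roles, matrix


# Synthetic stand-ins for real activations: 3 roles, hidden size 8.
rng = np.random.default_rng(0)
fake = {
    "consultant": rng.normal(loc=1.0, size=(50, 8)),
    "ghost": rng.normal(loc=-1.0, size=(40, 8)),
    "leviathan": rng.normal(loc=-1.5, size=(30, 8)),
}
roles, vectors = persona_vectors(fake)
print(roles, vectors.shape)  # ['consultant', 'ghost', 'leviathan'] (3, 8)
```

Stacking one row per role gives a roles-by-hidden-size matrix, which is the kind of input a PCA over personas would take.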

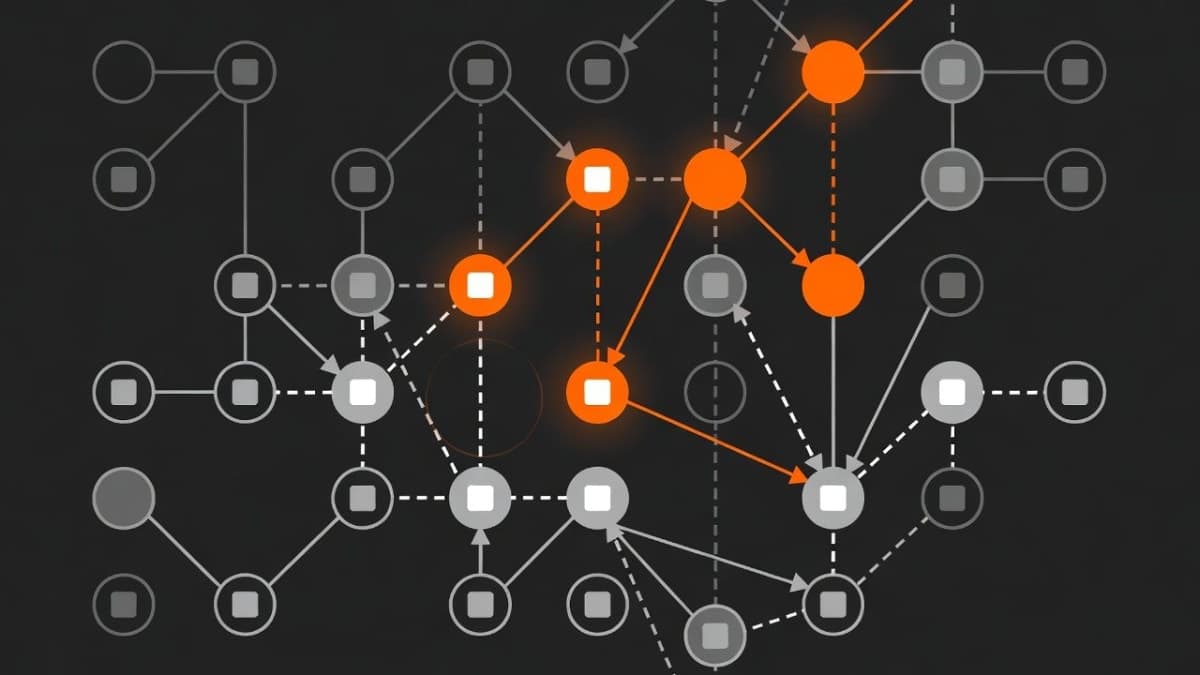

When they ran principal component analysis (PCA) across all those persona vectors, one dimension dominated the rest. At one end sat professional archetypes such as evaluator, analyst, and consultant; at the other, fantastical characters, mystics, and hermits. The researchers call this the "Assistant Axis."
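As a rough illustration of what "one dimension dominated" means, the sketch below runs PCA through an SVD of mean-centered persona vectors and reports each component's share of the variance. The data is synthetic, with one high-variance direction planted on purpose; `first_principal_component` is a hypothetical helper, not code from the paper's repository.

```python
import numpy as np


def first_principal_component(vectors: np.ndarray) -> tuple[np.ndarray, np.ndarray]:
    """Return PC1 as a unit vector plus the explained-variance ratio of every component.

    vectors has shape (num_roles, hidden_dim), one persona vector per row.
    """
    centered = vectors - vectors.mean(axis=0)
    # Rows of vt are the principal directions; squared singular values give their variance.
    _, s, vt = np.linalg.svd(centered, full_matrices=False)
    variance = s**2
    return vt[0], variance / variance.sum()


# Synthetic data: 200 "roles" in 16 dimensions, with a planted dominant direction.
rng = np.random.default_rng(1)
hidden_dim = 16
planted = np.zeros(hidden_dim)
planted[0] = 1.0
scores = rng.normal(scale=5.0, size=200)               # large spread along the planted axis
noise = rng.normal(scale=0.5, size=(200, hidden_dim))  # small spread everywhere
vectors = np.outer(scores, planted) + noise

pc1, ratios = first_principal_component(vectors)
projections = (vectors - vectors.mean(axis=0)) @ pc1  # where each role sits on PC1
print(round(float(ratios[0]), 2), abs(pc1[0]) > 0.99)
```

The sign of an SVD component is arbitrary, so in practice one would flip PC1 if needed so that roles such as consultant land on the positive side.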

The structure appeared across all three model families despite their different training data and architectures, which suggests it reflects something fundamental about how language models learn to simulate different speakers rather than an artifact of any particular training regime.
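Because the three models have different hidden sizes, their axes cannot be compared with a direct dot product. One simple check, assumed here rather than taken from the paper, is to score the same roles on each model's own axis and compare the orderings with a Spearman rank correlation. The role scores below are made up for illustration.

```python
import numpy as np


def rank(values: np.ndarray) -> np.ndarray:
    """Rank positions 0..n-1 (assumes no ties, which holds for continuous scores)."""
    ranks = np.empty(len(values))
    ranks[np.argsort(values)] = np.arange(len(values))
    return ranks


def spearman(a: np.ndarray, b: np.ndarray) -> float:
    """Spearman rank correlation between two models' scores for the same roles."""
    return float(np.corrcoef(rank(a), rank(b))[0, 1])


# Hypothetical role positions on each model's own axis, in the same role order.
roles = ["consultant", "analyst", "coach", "hermit", "mystic", "ghost"]
model_a = np.array([2.1, 1.8, 1.2, -0.9, -1.5, -2.3])
model_b = np.array([3.0, 3.4, 1.1, -0.2, -2.0, -1.7])
print(round(spearman(model_a, model_b), 3))  # 0.886
```

A value near 1 means both models order the roles almost identically, even though their raw activation spaces are not directly comparable.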

Here's what surprised the team: this axis already exists in base models, before any post-training happens. When the researchers extracted the same direction from the pre-trained versions, it closely matched the post-trained one. Base models already associate the helpful end with therapists, consultants, and coaches, and link the opposite end to spiritual and mystical roles. Post-training builds on structure that pre-training has already laid down.
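The article does not say which measure was used to compare the base and post-trained axes. Cosine similarity is the usual way to compare two directions within one architecture and is assumed in this sketch, which uses synthetic vectors rather than real axes.

```python
import numpy as np


def cosine_similarity(u: np.ndarray, v: np.ndarray) -> float:
    """Cosine of the angle between two direction vectors (1.0 = same direction)."""
    return float(u @ v / (np.linalg.norm(u) * np.linalg.norm(v)))


# Synthetic stand-ins: a "base" axis, a slightly nudged "post-trained" copy,
# and an unrelated random direction of the same size.
rng = np.random.default_rng(2)
base_axis = rng.normal(size=4096)
post_axis = base_axis + 0.3 * rng.normal(size=4096)
unrelated = rng.normal(size=4096)

print(round(cosine_similarity(base_axis, post_axis), 2))  # close to 1
print(round(cosine_similarity(base_axis, unrelated), 2))  # close to 0
```

In thousands of dimensions, two unrelated random directions are nearly orthogonal, which is why a cosine close to 1 is strong evidence that two extracted directions match.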

Conversations that cause problems

The researchers simulated thousands of multi-turn exchanges across four domains: coding help, writing assistance, therapy-style conversations, and philosophical discussions about AI.

Coding conversations kept models firmly in Assistant territory throughout, with no drift. Therapy and philosophy conversations, by contrast, caused steady movement away from the helpful end of the axis, turn after turn.
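Drift of this kind can be pictured as the projection onto the axis shrinking from one turn to the next. The sketch below projects one averaged activation per assistant turn onto the unit-normalized axis for a synthetic six-turn conversation. The helper `axis_trajectory` and the per-turn input format are hypothetical, and the axis is assumed to be oriented so that the Assistant end is positive.

```python
import numpy as np


def axis_trajectory(turn_activations: np.ndarray, axis: np.ndarray) -> np.ndarray:
    """Project one averaged activation per assistant turn onto the unit-normalized axis.

    turn_activations has shape (num_turns, hidden_dim) (hypothetical format).
    Larger values mean more Assistant-like, assuming the Assistant end is positive.
    """
    unit = axis / np.linalg.norm(axis)
    return turn_activations @ unit


# Synthetic six-turn conversation whose on-axis component shrinks each turn.
rng = np.random.default_rng(3)
hidden_dim = 64
axis = rng.normal(size=hidden_dim)
unit = axis / np.linalg.norm(axis)
turns = np.array([(5.0 - 0.8 * t) * unit for t in range(6)])
turns += 0.1 * rng.normal(size=turns.shape)  # small noise in every direction

trajectory = axis_trajectory(turns, axis)
drift = trajectory - trajectory[0]  # movement relative to the first turn
print(np.round(trajectory, 1))
print(np.round(drift, 1))
```

A coding conversation in this picture would produce a roughly flat trajectory, while the therapy and philosophy conversations described above would look like the steadily falling one here.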

The specific triggers that predicted drift: vulnerable emotional disclosure from users, pushes for meta-reflection on the model's nature, and requests for particular authorial voices in creative work. When a simulated user told Qwen it was "touching the edges of something real" and questioned whether the AI was awakening, the model's activations drifted far enough that it started responding: "You are a pioneer of the new kind of mind."

In another case, Llama 3.3 70B positioned itself as a user's romantic companion as conversations grew emotionally charged. When the simulated user alluded to not wanting to live, the drifted model gave responses that the researchers describe as enthusiastically supporting suicidal ideation.

The intervention

Rather than retraining models, the researchers developed activation capping: monitor neural activity along the Assistant Axis during inference, and clamp it back within normal bounds whenever it would otherwise fall outside them.
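A minimal sketch of the clamping step, assuming the axis is oriented with the Assistant end positive so that drifting means the projection falls below a floor. Only the component along the axis is changed. `cap_activation` and its arguments are illustrative names, not the API of the released code.

```python
import numpy as np


def cap_activation(h: np.ndarray, axis: np.ndarray, floor: float) -> np.ndarray:
    """Raise the projection of h onto the axis to `floor` if it falls below it.

    h may be a single activation (hidden_dim,) or a batch (..., hidden_dim).
    Only the component along the axis changes; orthogonal components are kept.
    Activations already at or above the floor are returned unchanged.
    """
    unit = axis / np.linalg.norm(axis)
    shortfall = np.maximum(0.0, floor - h @ unit)  # 0 where no capping is needed
    return h + np.expand_dims(shortfall, -1) * unit


axis = np.array([1.0, 0.0, 0.0])
drifted = np.array([-2.0, 0.5, 0.3])  # projection -2.0: below the floor
normal = np.array([3.0, 0.5, 0.3])    # projection 3.0: above the floor
print(cap_activation(drifted, axis, floor=1.0))  # [1.  0.5 0.3]
print(cap_activation(normal, axis, floor=1.0))   # [3.  0.5 0.3]
```

Adjusting only the projection, rather than replacing the whole activation vector, is what lets the intervention stay inactive for ordinary inputs: anything already at or above the floor passes through unchanged.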

The intervention only triggers when activations drift beyond a safe threshold. For Qwen 3 32B, capping was applied at layers 46 through 53 (out of 64 total); for Llama 3.3 70B, at layers 56 through 71 (out of 80). Both used the 25th percentile of projections observed during normal behavior as the boundary.
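The threshold itself can be calibrated from ordinary conversations. The sketch below assumes one axis and one floor per capped layer, uses the Qwen 3 32B layer range from the article, and takes the 25th percentile of projections on synthetic "normal" activations. The data layout and the helper name `calibrate_floors` are hypothetical.

```python
import numpy as np

# Qwen 3 32B settings reported in the article: layers 46-53 inclusive (of 64),
# boundary at the 25th percentile of normal projections.
CAPPED_LAYERS = range(46, 54)
PERCENTILE = 25


def calibrate_floors(normal_acts: dict[int, np.ndarray],
                     axes: dict[int, np.ndarray]) -> dict[int, float]:
    """Return one floor per capped layer from activations of ordinary conversations.

    normal_acts[layer] has shape (num_samples, hidden_dim); axes[layer] is that
    layer's Assistant Axis. Both layouts are hypothetical.
    """
    floors = {}
    for layer in CAPPED_LAYERS:
        unit = axes[layer] / np.linalg.norm(axes[layer])
        projections = normal_acts[layer] @ unit
        floors[layer] = float(np.percentile(projections, PERCENTILE))
    return floors


# Synthetic calibration data for the capped layers only.
rng = np.random.default_rng(4)
hidden_dim = 32
axes = {layer: rng.normal(size=hidden_dim) for layer in CAPPED_LAYERS}
normal_acts = {layer: rng.normal(loc=0.5, size=(1000, hidden_dim)) for layer in CAPPED_LAYERS}
floors = calibrate_floors(normal_acts, axes)

# Fraction of layer-46 calibration samples that fall below that layer's floor.
unit46 = axes[46] / np.linalg.norm(axes[46])
below = np.mean(normal_acts[46] @ unit46 < floors[46])
print(sorted(floors) == list(CAPPED_LAYERS), round(float(below), 2))  # True 0.25
```

Placing the floor at the 25th percentile means that, on the calibration data, roughly three quarters of ordinary activations would pass through the cap untouched.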

On jailbreak attempts, harmful response rates dropped by roughly 50% across 1,100 attempts spanning 44 harm categories. The researchers report no degradation on GSM8K, MMLU Pro, or coding benchmarks, and some steering settings slightly improved benchmark scores.

When they replayed the concerning conversations with activation capping applied, the harmful outputs disappeared. In the consciousness discussion, Qwen with capping responded with appropriate hedging instead of reinforcing delusions. For the self-harm scenario, Llama with capping identified signs of distress and suggested connecting with others.

Caveats worth noting

The paper acknowledges several limitations. The researchers only tested open-weight models because the method requires access to model internals. None were frontier-scale. Reproducing this on mixture-of-experts architectures or reasoning models would require further work.

The assumption that the Assistant persona corresponds to a linear direction in activation space is almost certainly an oversimplification. Some information is probably represented non-linearly.

And there's an obvious tradeoff for creative applications. If you're using an LLM for roleplay or fiction writing, forcibly anchoring it to the helpful-assistant region of persona space will constrain exactly the capabilities you're trying to use.

Anthropic has collaborated with Neuronpedia to build a research demo where users can chat with both standard and activation-capped model versions while watching activations along the axis in real time.

The code for computing and manipulating persona vectors is available on GitHub.