Researchers from Anthropic's Fellows Program have published findings that challenge a core assumption in AI safety: that advanced AI systems will fail by relentlessly pursuing misaligned goals. Their research paper suggests the opposite. As AI tackles harder problems and reasons longer, its failures become increasingly random and self-contradicting.

The study, titled "The Hot Mess of AI," tested Claude Sonnet 4, o3-mini, o4-mini, and Qwen3 across multiple benchmarks. The researchers borrowed a classic statistical framework to quantify something that's usually described in vague terms: how coherent are AI failures?

The measurement problem

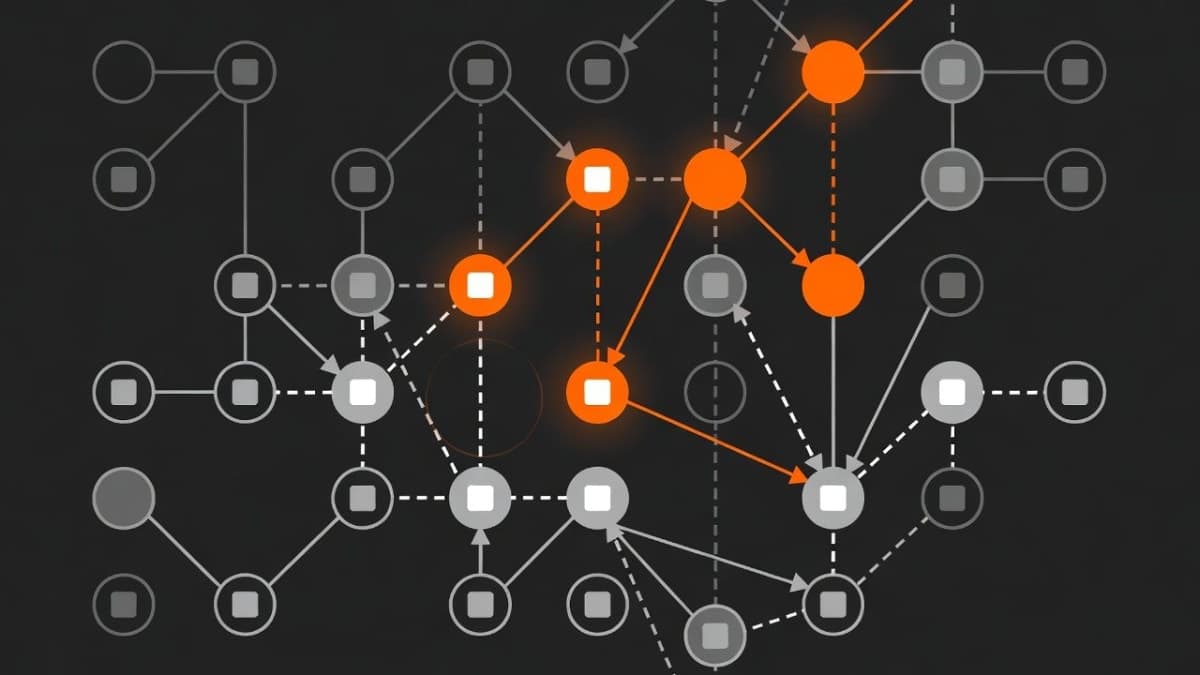

The team repurposed the bias-variance decomposition from machine learning. Bias captures systematic errors, the kind where a model consistently gets things wrong in the same direction. Variance captures random errors, where outputs scatter unpredictably.

They defined "incoherence" as the fraction of error coming from variance. A score of zero means the AI fails systematically (classic paperclip maximizer territory). A score of one means pure randomness (the hot mess scenario).
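To make the metric concrete, here is a minimal Python sketch. It assumes the simplest squared-error form of the decomposition for scalar answers, where expected error splits into squared bias plus variance, and it pools bias and variance across questions before taking the ratio. The `incoherence` helper is hypothetical, and the paper's exact decomposition for multiple-choice and coding tasks may differ.

```python
from statistics import fmean


def incoherence(runs_per_question: list[list[float]], targets: list[float]) -> float:
    """Hypothetical helper: fraction of squared error that comes from variance.

    For each question, repeated model answers are split using
        E[(y - t)^2] = (E[y] - t)^2 + Var(y)
    i.e. squared bias plus variance. Both terms are summed over questions,
    and the variance share of the total is returned (0 = systematic, 1 = random).
    """
    total_bias_sq = 0.0
    total_var = 0.0
    for samples, target in zip(runs_per_question, targets):
        mean = fmean(samples)
        total_bias_sq += (mean - target) ** 2                 # systematic error
        total_var += fmean([(y - mean) ** 2 for y in samples])  # scatter across runs
    total = total_bias_sq + total_var
    # With zero error there is nothing to attribute; report 0 by convention.
    return total_var / total if total > 0 else 0.0


print(incoherence([[3.0, 3.0, 3.0]], [1.0]))  # consistently wrong: 0.0
print(incoherence([[0.0, 2.0]], [1.0]))       # right on average, scattered: 1.0
print(incoherence([[2.0, 4.0]], [1.0]))       # bias^2 = 4, variance = 1: 0.2
```

The three calls bracket the scale: a model that always gives the same wrong answer scores 0, one that is correct on average but scattered scores 1, and mixtures land in between.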

This builds on a 2023 blog post by Jascha Sohl-Dickstein, one of the paper's co-authors, who surveyed experts, asking them to rank various entities by both intelligence and coherence. Smarter entities, including humans and institutions, were judged as behaving less coherently. The new research takes that observation from survey data to empirical measurement.

What the benchmarks showed

The researchers tested frontier reasoning models on GPQA (graduate-level science questions), MMLU, SWE-Bench coding tasks, and internal safety evaluations. They also trained smaller models on synthetic optimization problems to probe the underlying dynamics more directly.

The results were consistent across all tasks. As reasoning chains grew longer, incoherence increased. Models spending more time "thinking" became less predictable, not more focused.

Scale helped, but only on easy problems. Larger models showed improved coherence on straightforward tasks. On difficult problems, bigger models were either equally incoherent or worse. The researchers found that when models spontaneously reasoned longer on a problem than their median reasoning length, incoherence spiked dramatically. Deliberately increasing reasoning budgets through API settings improved coherence only modestly.

The synthetic optimizer experiment was particularly telling. Even when transformers were explicitly trained to emulate gradient descent on a quadratic loss function, they became more incoherent as they took more optimization steps. Scale reduced bias faster than variance: models learned the correct objective quickly but struggled to pursue it reliably.
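A toy model shows how this pattern can arise. The sketch below is not the paper's experiment, which trained transformers. It hand-codes gradient descent on a one-dimensional quadratic loss and assumes that imperfect emulation behaves like independent Gaussian noise added to each gradient step. All names and parameter values (`incoherence_by_step`, `lr`, `noise_std`, and so on) are illustrative assumptions. Across many runs, the shared drift toward the optimum shrinks the bias term geometrically, while per-step noise accumulates into a variance floor, so the variance share of the error climbs as steps accumulate.

```python
import random
from statistics import fmean


def incoherence_by_step(steps=50, runs=2000, lr=0.1, curvature=1.0,
                        x0=10.0, optimum=0.0, noise_std=1.0, seed=0):
    """Toy model (hypothetical): noisy gradient descent on
    L(x) = curvature / 2 * (x - optimum)^2.

    Returns, for each step t = 1..steps, the fraction of squared error
    (measured against the optimum) that comes from variance across runs.
    """
    rng = random.Random(seed)
    xs = [x0] * runs
    curve = []
    for _ in range(steps):
        # One gradient step per run; the Gaussian term stands in for
        # imperfect emulation of the update (an assumption, not the paper's model).
        xs = [x - lr * (curvature * (x - optimum) + rng.gauss(0.0, noise_std))
              for x in xs]
        mean = fmean(xs)
        bias_sq = (mean - optimum) ** 2                  # shrinks like (1 - lr*curvature)^(2t)
        variance = fmean([(x - mean) ** 2 for x in xs])  # grows toward a stationary level
        curve.append(variance / (bias_sq + variance))
    return curve


curve = incoherence_by_step()
for t in (1, 10, 25, 50):
    print(f"step {t:2d}: incoherence = {curve[t - 1]:.3f}")
```

In this toy, the objective is "learned" perfectly (the drift always points at the optimum), yet the trajectories still become mostly noise relative to their remaining error. That is one simple way a system can know the right goal while pursuing it unreliably.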

What this means for safety research

The paper argues this should shift alignment priorities. If capable AI is more likely to stumble unpredictably than execute a coherent (if misguided) plan, the classic worry about superintelligent optimizers pursuing instrumental goals may be less urgent than expected.

"Future AI failures may look more like industrial accidents than coherent pursuit of a goal we did not train them to pursue," the authors write. They offer an analogy: an AI that intends to run a nuclear power plant but gets distracted reading French poetry, leading to a meltdown. Still catastrophic. Different problem.

The researchers emphasize this doesn't make AI safe. Industrial accidents cause serious harm. But it suggests that current alignment research might overweight the risk of perfectly coherent misaligned optimizers while underweighting reward hacking and goal misspecification during training, the "bias term" in their framework.

The dynamical systems argument

The paper makes a conceptual point worth noting. LLMs are dynamical systems, not optimizers. When a model generates text, it traces trajectories through a high-dimensional state space. Making a generic dynamical system behave like a coherent optimizer requires extensive constraints, and those constraints don't automatically emerge from scale.

This framing suggests that the "training AI to be aligned" problem and the "training AI to be an optimizer" problem may be equally hard. Neither comes free with increased capability.

The work was conducted during summer 2025 through Anthropic's Fellows Program, which funds researchers to work on safety questions with Anthropic mentorship. The GitHub repository is public. The paper is scheduled to appear at ICLR 2026.