Sakana AI, the Tokyo-based research lab, released a technical report in December describing RePo, a method that lets language models assign learned positions to tokens rather than relying on fixed sequential indices. The approach adds less than 1% compute overhead and shows consistent gains on tasks where standard positional encoding struggles.

The Flat Sequence Problem

Transformers read input as a single flat line of tokens: position 0, position 1, position 2, and so on down the sequence. The model's only structural signal is this integer index, typically encoded through methods like RoPE (Rotary Position Embedding). RoPE rotates each query and key vector by an angle proportional to its token's position, so the attention score between two tokens depends on the distance between them.
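To make the RoPE mechanism concrete, here is a minimal pure-Python sketch of the standard rotary formulation. It assumes base 10000 and rotates consecutive dimension pairs; production implementations vectorize this and may pair dimensions differently. The check at the end shows the property that matters for this article: shifting both tokens by the same amount leaves their attention logit unchanged, so only the index gap carries positional information.

```python
import math

def rope(vec, pos, base=10000.0):
    """Rotate each consecutive (x, y) pair of `vec` by pos * base**(-i/d)."""
    d = len(vec)
    assert d % 2 == 0, "RoPE needs an even dimension"
    out = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)  # later pairs rotate more slowly
        c, s = math.cos(theta), math.sin(theta)
        x, y = vec[i], vec[i + 1]
        out.extend([x * c - y * s, x * s + y * c])
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

# Arbitrary toy query/key vectors (d = 4).
q = [0.3, -1.2, 0.8, 0.5]
k = [1.0, 0.4, -0.6, 0.9]

def score(m, n):
    """Attention logit for a query at index m and a key at index n."""
    return dot(rope(q, m), rope(k, n))

# Same gap (3), very different absolute indices: the logits match.
print(score(10, 7), score(110, 107))
```

Because only the gap matters, a relevant token thousands of positions away is always "thousands of positions away" to every head, no matter what it contains.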

This works fine for many tasks. Natural language tends to have local dependencies. But modern prompting scenarios increasingly break this assumption. Retrieval-augmented generation stuffs relevant passages between irrelevant ones. Tables get linearized into text. Critical information sits thousands of tokens away from where the model needs to use it.

The Sakana team frames this through Cognitive Load Theory, borrowed from educational psychology. Working memory is limited. When you waste capacity on clutter, you have less left for actual reasoning. The argument: LLMs face a similar bottleneck when their attention has to wade through noise to find what matters.

How RePo Works

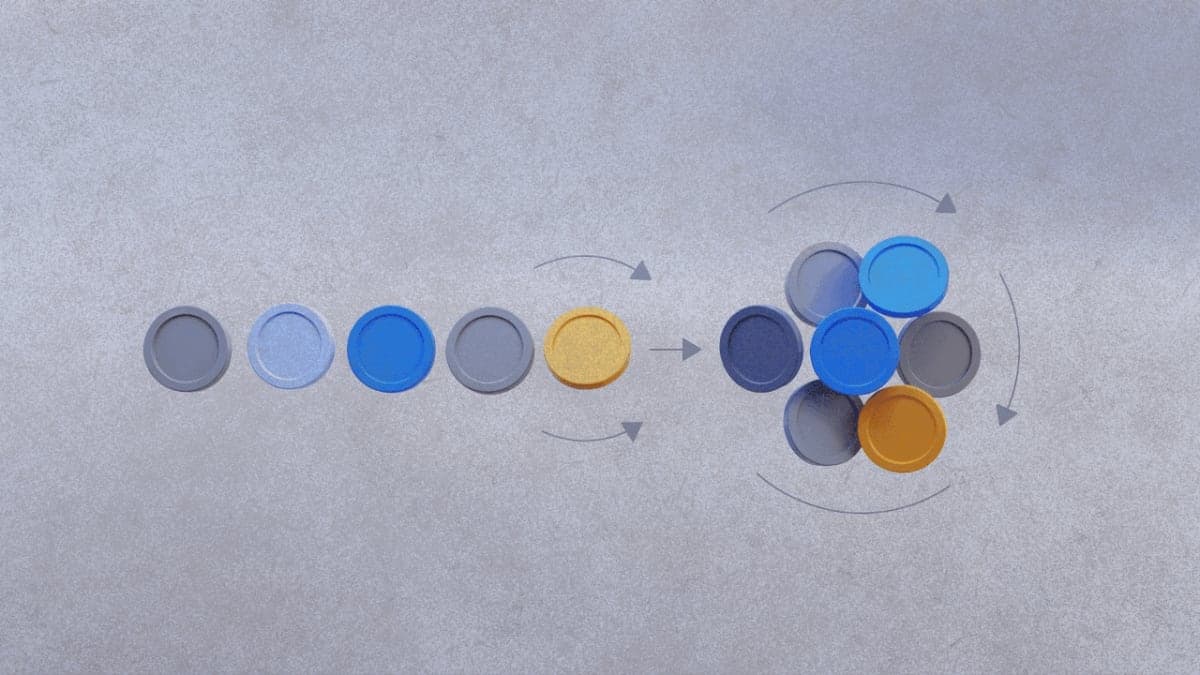

The fix is conceptually simple. Instead of hardcoding position as the token's index in the sequence, let the model learn what position each token should have.

For each token, a small network predicts a real-valued position from the token's hidden state. These learned positions then get plugged into the standard positional encoding machinery. Tokens that are semantically related can end up closer together in position space even if they were far apart in the original prompt.
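The sketch below shows the idea in miniature. It is an illustration under stated assumptions, not Sakana's code: `predict_position` is a hypothetical stand-in for the small position network (a single linear map here), and its weights are hand-picked rather than trained. What it does show faithfully is that RoPE accepts real-valued positions without modification, so a needle six tokens from the query can be placed one position unit away while the distractors land far off in position space.

```python
import math

def rope(vec, pos, base=10000.0):
    """Standard RoPE rotation; `pos` may be any real number, not just an index."""
    d = len(vec)
    out = []
    for i in range(0, d, 2):
        theta = pos * base ** (-i / d)
        c, s = math.cos(theta), math.sin(theta)
        out.extend([vec[i] * c - vec[i + 1] * s, vec[i] * s + vec[i + 1] * c])
    return out

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def predict_position(hidden, weights, bias):
    """Hypothetical position network: one linear map from hidden state to a real position."""
    return sum(h * w for h, w in zip(hidden, weights)) + bias

# Toy 2-d hidden states for a 7-token prompt; list index = original position.
hidden_states = [
    [1.0, 0.00],                                                       # 0: query
    [0.0, 1.00], [0.1, 0.90], [0.0, 1.10], [0.2, 0.95], [0.0, 1.00],  # 1-5: distractors
    [1.0, 0.02],                                                       # 6: needle
]

# Hand-picked weights (not trained): the second feature sets the position,
# so distractors land around 45-55 while query and needle stay near 0-1.
W, B = [0.0, 50.0], 0.0
positions = [predict_position(h, W, B) for h in hidden_states]
print([round(p, 2) for p in positions])

q = [0.3, -1.2, 0.8, 0.5]  # toy query vector for token 0
k = [1.0, 0.4, -0.6, 0.9]  # toy key vector for token 6

# With learned positions, the query-needle logit equals the logit the pair
# would get if the needle sat directly next to the query (gap of 1).
learned = dot(rope(q, positions[0]), rope(k, positions[6]))
adjacent = dot(rope(q, 0.0), rope(k, 1.0))
print(learned, adjacent)
```

In a trained model the weights would come from gradient descent on the language-modeling loss rather than from hand tuning.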

The GitHub implementation applies the module starting around layer 5, roughly a third of the way through the network. Each attention head learns its own position assignments, though the underlying position representation is shared across all heads in the same layer.
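The layer and head arrangement can be sketched as follows. Everything here is hypothetical: the function names, the linear shared projection `proj`, the per-head vectors, and reading "layer 5" as a 0-based index are illustrative assumptions, not details confirmed from the GitHub code. The point is the structure: early layers keep plain integer indices, while later layers compute one shared representation per token, which each head then projects to its own scalar position.

```python
REPO_START_LAYER = 5  # assumption: 0-based index of the first layer using learned positions

def matvec(rows, vec):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(r * v for r, v in zip(row, vec)) for row in rows]

def token_head_positions(hidden, proj, head_vectors):
    """One shared representation per token, then one scalar position per head."""
    shared = matvec(proj, hidden)          # shared by every head in this layer
    return matvec(head_vectors, shared)    # each row of head_vectors belongs to one head

def positions_for_layer(layer, hidden_states, proj, head_vectors):
    """Return positions[token][head] for one layer."""
    n_heads = len(head_vectors)
    if layer < REPO_START_LAYER:
        # Early layers: ordinary integer indices, identical for every head.
        return [[float(i)] * n_heads for i in range(len(hidden_states))]
    return [token_head_positions(h, proj, head_vectors) for h in hidden_states]

# Toy layer: 2-d hidden states, a 3-d shared representation, two heads.
hidden_states = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5]]
proj = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
head_vectors = [[1.0, 0.0, 0.0], [0.0, 2.0, 0.0]]

print(positions_for_layer(0, hidden_states, proj, head_vectors))
# [[0.0, 0.0], [1.0, 1.0], [2.0, 2.0]]
print(positions_for_layer(5, hidden_states, proj, head_vectors))
# [[1.0, 0.0], [0.0, 2.0], [0.5, 1.0]]
```

In this sketch each RePo layer would carry its own `proj` and `head_vectors`; sharing `proj` within a layer keeps the extra parameters small while still letting heads disagree about where a token belongs.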

Training is end-to-end. No special pretraining phase. The RePo parameters just get optimized alongside everything else.
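End-to-end training works because a RoPE attention logit is a smooth function of real-valued positions, so gradients flow back into the position network. The derivation below shows this for one 2-D frequency pair with rotation rate ω. It is a standard property of RoPE rather than a derivation quoted from the report, and f_φ is our notation for the position network applied to hidden state h.

```latex
\begin{aligned}
R(\theta) &= \begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix},
\qquad p_i = f_\phi(h_i) \\
s(p_m, p_n) &= \big(R(p_m\omega)\,q\big)^\top \big(R(p_n\omega)\,k\big)
  = q^\top R\big((p_n - p_m)\,\omega\big)\,k \\
\frac{\partial s}{\partial p_m} &= -\,\omega\, q^\top R'\big((p_n - p_m)\,\omega\big)\,k,
\qquad
R'(\theta) = \begin{pmatrix} -\sin\theta & -\cos\theta \\ \cos\theta & -\sin\theta \end{pmatrix} \\
\frac{\partial s}{\partial \phi} &= \frac{\partial s}{\partial p_m}\,\frac{\partial f_\phi(h_m)}{\partial \phi}
  + \frac{\partial s}{\partial p_n}\,\frac{\partial f_\phi(h_n)}{\partial \phi}
\end{aligned}
```

Since every term is finite and continuous, the position network's parameters can be updated by the same backward pass as the rest of the model.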

What the Benchmarks Show

The team continually pretrained OLMo-2 1B, Allen AI's fully open language model, for 50 billion tokens with a 4,096-token context window. They compared RePo against several baselines: standard RoPE, NoPE (no positional encoding at all), and hybrid approaches that interleave RoPE and NoPE layers.

Three evaluation categories, each targeting a scenario where rigid positions should hurt:

Noisy context. The RULER benchmark injects irrelevant content around the information the model needs. RePo beats RoPE by 11 points on average here. The gain on the needle-in-a-haystack subset specifically: 88.25 versus 82.56.

Structured data. When graphs and tables get flattened into text, the original structure is destroyed. On NLGraph and HybridQA, RePo shows a 1.94 point improvement over RoPE. The paper notes, though, that NoPE actually performs best on the graph dataset, which suggests the locality bias built into positional encodings may actively hurt on graph-structured inputs.

Long context. The models were tested at 8K and 16K tokens, well beyond the 4,096-token training window. The gap widens as context grows: on LongBench, RePo scores 28.31 versus RoPE's 21.07.

The general capability benchmarks tell a different story. On ARC, HellaSwag, MMLU-Pro, and TriviaQA, RePo matches RoPE within a point across the board, so the specialized gains don't come at the cost of standard performance.

What the Model Actually Learns

The analysis section digs into attention patterns. On needle-in-a-haystack tasks, RePo allocates more attention mass to the distant-but-relevant needle tokens (2.013 versus 1.754 for RoPE) while pulling attention away from nearby query tokens. It's doing what you'd hope: focusing resources on what matters rather than what's close.
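The attention-mass comparison can be illustrated with a small helper that sums how much attention weight lands on a set of target (needle) key indices. This is a hypothetical sketch, not the paper's metric: a single softmax row sums to 1, so the reported values of 2.013 and 1.754 must come from some aggregation or scaling the article doesn't describe. The helper averages over query rows for one head; comparing two models would mean running it on each model's attention maps for the same prompt.

```python
def attention_mass(attn_rows, target_indices):
    """Average (over query rows) of the attention weight placed on target keys.

    attn_rows: one softmax-normalized list of weights per query token.
    target_indices: key positions holding the needle tokens.
    """
    targets = set(target_indices)
    per_row = [sum(w for j, w in enumerate(row) if j in targets) for row in attn_rows]
    return sum(per_row) / len(per_row)

# Toy attention map: 2 query rows over 6 keys; the needle sits at keys 1 and 2.
attn = [
    [0.1, 0.3, 0.2, 0.1, 0.1, 0.2],
    [0.2, 0.1, 0.1, 0.2, 0.2, 0.2],
]
print(attention_mass(attn, [1, 2]))  # approximately 0.35
```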

The learned position spaces turn out to be dense and nonlinear. Different attention heads specialize to different position ranges. Some do local reorganization. Others do global. The visualizations in the interactive demo show patterns like plateaus (many tokens mapped to similar positions) and abrupt jumps.
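To make "plateaus" and "abrupt jumps" concrete, here is a hypothetical helper that labels each step in one head's learned-position sequence by the size of the change between neighboring tokens. The thresholds and the sample sequence are arbitrary illustration values, not anything taken from the report.

```python
def label_steps(positions, plateau_eps=0.05, jump_threshold=5.0):
    """Label each consecutive pair of learned positions as plateau, jump, or step.

    plateau_eps and jump_threshold are illustrative values (assumptions).
    """
    labels = []
    for prev, cur in zip(positions, positions[1:]):
        delta = abs(cur - prev)
        if delta < plateau_eps:
            labels.append("plateau")  # neighbors mapped to nearly the same position
        elif delta > jump_threshold:
            labels.append("jump")     # abrupt move to a distant region of position space
        else:
            labels.append("step")
    return labels

# Made-up position sequence for illustration.
learned = [0.0, 0.01, 0.02, 12.0, 12.03, 13.0]
print(label_steps(learned))
# ['plateau', 'plateau', 'jump', 'plateau', 'step']
```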

The Caveats

This was tested on a 1B parameter model. The paper doesn't claim the approach scales to larger sizes, and given the compound nature of attention dynamics, I'd want to see evidence at 7B+ before assuming it generalizes.

The 1% overhead figure is for their optimized implementation. If you're trying to replicate this in vanilla PyTorch without their infrastructure, expect worse numbers.

And the Cognitive Load Theory framing is evocative but unverifiable. Whether LLMs actually experience something analogous to human working memory constraints is a philosophical question the benchmarks can't answer. The empirical results stand on their own; the theoretical motivation is window dressing.

Where This Fits

RePo joins a growing family of approaches trying to make transformers more flexible about position. YaRN extends context by rescaling RoPE's rotation frequencies. NoPE variants drop positional encoding entirely from some layers. Sakana's own DroPE work explores dropping positional embeddings to extend context.

What's different here is that the model learns to reorganize rather than just learning to cope with fixed organization. It's a step toward models that can structure their own working memory rather than having structure imposed by architecture.

The pretrained model is available on Hugging Face. Whether the approach gets picked up by larger labs will depend on whether the gains hold at scale.